Why not both?

You’re against computers being able to understand language, video, and images?

They don’t understand though. A lot of AI evangelists seem to smooth over that detail: it is an LLM, not anything that “understands” language, video, or images.

There are uses for these kinds of models, like semi-automating the analysis of large pools of data, but even in a socialist society the resources allocated to doing it the way it is currently done are completely unsustainable.

They don’t understand though. A lot of AI evangelists seem to smooth over that detail: it is an LLM, not anything that “understands” language, video, or images.

We’re into the Chinese Room problem. “Understand” is not a well-defined or measurable thing. I don’t see how it could be measured except from looking at inputs&outputs.

Does this mean that my TI-84 calculator was actually an AI since it could solve equations I put into it? Or Wolfram Alpha? Or a speed camera? These are all able to read external inputs to produce an output. Where do you draw your line? The current technology is nowhere near where I draw mine.

We are currently ruining the biosphere so that some people might earn a lot of money by being able to lay off workers. If you remove this integral part of what “AI” is, and all the other negative externalities, of course it will look better, but not all of the externalities are tied to the capitalist mode of production. Economies and resource allocation would still be a thing without capitalism; it isn’t like everything magically becomes good.

A choose your own adventure novel is an AI because you feed it a set of inputs (page numbers) and it feeds you a set of outputs (a dynamic story).

“Understand” is not a well-defined or measurable thing.

So why attribute it to an LLM in the first place then? All of the LLMs are just floating point numbers being multiplied and added inside a digital computer, the onus is on the AI bros to show what kind of floating point multiplication is real “understanding”.

But it’s inherently impossible to “show” anything except inputs&outputs (including for a biological system).

What are you using the word “real” to mean, and is it aloof from the measurable behaviour of the system?

You seem to be using a mental model that there’s

- A: the measurable inputs & outputs of the system

- B: the “real understanding”, which is separate

How can you prove B exists if it’s not measurable? You say there is an “onus” to do so. I don’t agree that such an onus exists.

This is exactly the Chinese Room paper. ‘Understand’ is usually understood in a functionalist way.

But, ironically, the Chinese Room Argument you’re bringing up supports what others are saying: that LLMs do not ‘understand’ anything.

It seems to me like you are defining ‘understanding’ in a functionalist sense so that you can say input/output is equivalent to understanding, and therefore that the measurable process in itself shows ‘understanding’. But that’s not what Searle, and seemingly the others here, mean by ‘understanding’. As Searle argues, what matters is not purely the syntactic manipulation but the semantics. In other words, these LLMs do not “know” the information they provide; they are just repeating based on the input/output process with which they were programmed. LLMs do not project any meaning onto, or internalize any meaning from, the input/output process. If they had some reflexive consciousness and any ‘understanding’, they could critically approach the meaning of the information in order to assess its validity against facts, rather than just naïvely proclaiming that cockroaches got their name because they like to crawl into penises at night. Do you believe LLMs are conscious?

How can you prove B exists if it’s not measurable?

Because I’ve felt it. I’ve felt how understanding feels, because ultimately understanding is a conscious experience within a mind. You cannot define understanding without referencing conscious experience; you cannot possibly define it only in terms of behavior or function. So either you have to concede that every floating point multiplication in a digital chip “feels like something” at some level, or you have to show which specific kind of floating point multiplication does.

I don’t see how it could be measured except from looking at inputs&outputs.

Okay, then consider that when you input something into an LLM and regenerate the responses a few times, it can come up with outputs of completely opposite (and equally incorrect) meaning, proving that it does not have any functional understanding of anything and instead simply outputs random noise that sometimes looks similar to what one would output if they did understand the content in question.

Right. Like if I were talking to someone in total delirium and their responses were random and not a good fit for the question.

LLMs are not like that.

You don’t seem to have read my comment. Please address what I said.

when you input something into an LLM and regenerate the responses a few times, it can come up with outputs of completely opposite (and equally incorrect) meaning

Can you paste an example of this error?

I’m against the current iteration of the buzzword that involves a bunch of wasted money being dumped into something that also generates a ton of energy use to get things somewhat correct rather than having it go towards actual needs we have affecting humanity.

Fusion’s close to a core need of humanity.

Wait, you think fusion will be developed thanks to AI?

You don’t?

No, I haven’t seen any major technological breakthroughs coming from language models, other than language models themselves. Have you?

No. You want to suddenly change the subject to language models?

I am against the marketing buzz that is pretending (lying) that computers can understand language, video, and images, yes.

I am not against actual AI but it does not exist yet

They can functionally understand a good portion of it.

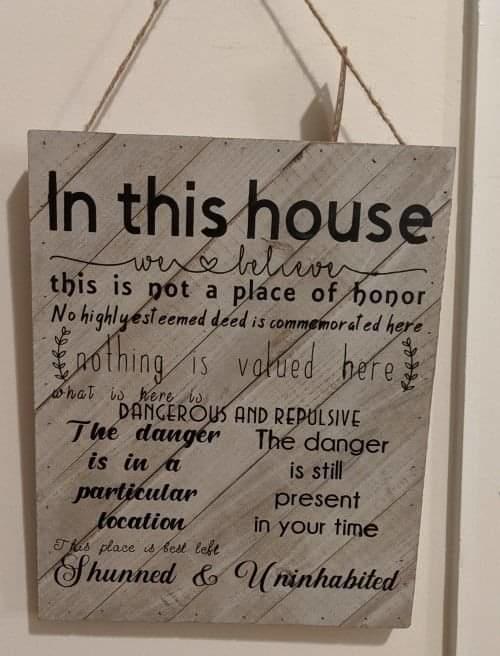

e.g. I can input a meme plus the words “explain this meme” and it can output an explanation.

No, I’m pretty sure I’m also against AI. I’m against artists not being paid for their work and being replaced by subpar machine learning regurgitating their art without any sense.

You’re literally talking about capitalism fucking over the artist here. There’s no reason the AI can’t be helping you do the boring shit in your work faster, or why it shouldn’t benefit only you directly.

It can also be used to improve your skills as an artist. For example, there’s a music theory plugin called Scaler 2 which uses AI. You can run recordings through Scaler and it will spit out the chords and key the songs are in. I’ve been using it to learn music theory. I’m not sure if any of y’all have tried learning music theory in a formal setting but a lot of teachers are incredibly pretentious, especially if you tell them you’re a guitarist or want to make electronic dance music. You could technically use it to write entire songs but those would be boring and lifeless.

Yeah, it’s useful for doing dumb boring work, not for offloading the entire creative process to it. It’s like saying Photoshop ruined photography. Rather, I would argue it created an entire sub-genre of photos.

We shouldn’t lose the tool because of other tools trying to yuck our yum.

that use for llms was cooked up by capitalism

it could very well be just another tool to assist artists

Also, what’s inherently wrong with art being generated by a computer? Not every piece of art made by a human is a unique, incredibly creative, never-before-seen thing, nor does it need to be; in fact, most human-made art is just a rehashing of previous things.

This is excellent, thanks for sharing

That’s capitalism

Capitalism is the economic model where the wealth created by the workers is owned by the people who own the tools and resources required to create said wealth, and not by the workers who put in the work to create it.

Here is a comic that might help you understand:

When you say paid…

The treat printers are tools. Tools can be useful.

Tools can also be horrid when wielded (or stanned) by tools.

Keep in mind the staggering energy costs and carbon waste involved with the widespread use of this tool. When tools expect, even demand the tool to be used everywhere at an increasing rate, they’re being especially horrid tools.

It’s worth noting that the inefficiency isn’t inherent in this tool. It’s largely an artifact of the tech being new and used in naive ways. This is a good example of a massive improvement from a relatively straightforward optimization: https://lmsys.org/blog/2024-07-01-routellm/
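For anyone wondering what that kind of optimization looks like, the general idea is routing: send easy queries to a cheap model and only the hard ones to the expensive model. Here’s a rough sketch of the idea. To be clear, the scoring heuristic, the threshold, and the model names are all made up for illustration; this is not RouteLLM’s actual code or API, and a real router would use a trained classifier rather than keyword matching.

```python
# Illustrative sketch only: the scorer, threshold, and model names are
# hypothetical and are not taken from RouteLLM itself.

HARD_HINTS = ("prove", "derive", "debug", "step by step")


def difficulty(query: str) -> float:
    """Toy difficulty score in [0, 1]; a real router would use a trained model."""
    q = query.lower()
    hint_score = 0.6 if any(hint in q for hint in HARD_HINTS) else 0.0
    length_score = min(len(q.split()) / 100, 0.4)
    return hint_score + length_score


def route(query: str, threshold: float = 0.5) -> str:
    """Send hard-looking queries to the expensive model, the rest to the cheap one."""
    return "strong-model" if difficulty(query) >= threshold else "weak-model"


print(route("What is the capital of France?"))
print(route("Prove that the square root of 2 is irrational."))
```

The savings come from the fact that most everyday queries never need the big model, so the expensive compute is only spent where it plausibly matters.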

Lots of efficiency improvements are possible for lots of tools but they often don’t happen on a sufficiently large scale because of capitalism. It’s why we have “just another lane, bro” stroads instead of viable mass transit across most of Burgerland, for example.

I highly doubt bazinga-Americans, from ruling class billionaires to their stans and glazers, are that interested in efficiency when they feverishly demand ascended techno-gods to emerge from sufficiently large treat printer databases. One such glazer is even in this thread, right now.

In this case, I think we are going to see such improvements because there’s a direct benefit to companies operating LLMs to save costs. It’s also worth noting that a lot of the improvements are happening in the open source space, and I firmly believe that’s how this tech should be developed in the first place.

I find complaining about the fact that generative models exist isn’t really productive. There’s no putting toothpaste back in the tube at this point. However, it is valuable to have discussions regarding how this tech should be developed and used going forward.

One more thing: you may want to look at the numbers for just how vastly extensive and wasteful current “AI” usage is among tech companies and how much more they intend to expand its use, whether people ask for it or not, pretty much everywhere.

If you haven’t heard of the Jevons Paradox, it also helps explain why increasingly efficient gasoline engines haven’t actually reduced overall carbon waste because more and more of those more efficient gasoline engines were used all the while.
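To put some purely hypothetical numbers on that (these are made up to show the arithmetic, not real measurements): if engines get twice as efficient but total distance driven triples, total fuel burned still goes up.

```python
# Hypothetical numbers for illustration only; not measured data.

def total_fuel(distance_km: float, km_per_litre: float) -> float:
    """Fuel burned is total usage divided by efficiency."""
    return distance_km / km_per_litre


# Before: less efficient engines, less driving overall.
before = total_fuel(distance_km=1_000, km_per_litre=10)

# After: efficiency doubles, but total driving triples.
after = total_fuel(distance_km=3_000, km_per_litre=20)

print(before, after)
```

Efficiency per unit improves, but overall consumption only falls if usage grows more slowly than efficiency does.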

I’m well aware of Jevons Paradox; however, what it says is that we’ll always find new uses for an energy surplus. If it wasn’t LLMs then it would just be something else. There’s nothing uniquely bad about AI, it’s just a technology that can be used in a sensible way or not. The thing we need to be focusing on is how we structure our society to ensure that we’re not using technology in ways that are harmful to us.

If it wasn’t LLMs then it would just be something else.

Again, I’m not down with inevitabilism arguments. May as well say the Joads’ house was going to get torn down somehow too.

If one believes nothing can or even should be done about destructive excesses of capitalism, where does the leftism part even begin?

There’s nothing uniquely bad about AI

There actually is considering the jobs and consequent material conditions affected by it that were otherwise unaffected before its use. Just saying it’s all the same sounds like downright drilposting.

The thing we need to be focusing on is how we structure our society to ensure that we’re not using technology in ways that are harmful to us.

No shit. Same deal with CFCs, high fructose corn syrup, partially hydrogenated soybean oil, and leaded gasoline. Saying “do nothing, it’s inevitable and no different than anything before and it can’t be helped” yet also “restructure society” is downright paradoxical to me here.

Well, you brought up Jevons paradox here, which kind of is an inevitabilist argument. My view is simply that Jevons paradox is an observation of how the capitalist system operates, and as long as this system of relations remains in place we will see problems with how technology is used.

If one believes nothing can or even should be done about destructive excesses of capitalism, where does the leftism part even begin?

I think I was very clear that I think that destructive excesses of capitalism are precisely the problem here. What I continue to point out is that that’s a completely separate discussion from whether LLMs exist or not.

There actually is considering the jobs and consequent material conditions affected by it that were otherwise unaffected before its use. Just saying it’s all the same sounds like downright drilposting.

The jobs and consequent material conditions are affected by the capitalist system of relations and how it uses automation in ways that are hostile to workers. Automation itself is not the problem here.

No shit. Same deal with CFCs, high fructose corn syrup, partially hydrogenated soybean oil, and leaded gasoline. Saying “do nothing, it’s inevitable and no different than anything before and it can’t be helped” yet also “restructure society” is downright paradoxical to me here.

Nowhere did I say do nothing. What I actually said repeatedly is that you’re focusing on the wrong thing and that I don’t see technology itself as the problem.

In this case, I think we are going to see such improvements because there’s a direct benefit to companies operating LLMs to save costs.

I’m not so sure, not when a lot of venture capital money often rides on grandiose promises to dazzle investors (including vague promises of nuclear fusion payback from a startup in four years in Microsoft’s case)

There’s no putting toothpaste back in the tube at this point.

Considering the already present socioeconomic consequences of this unregulated technology, from career/reputation threatening deepfaking to further working class precarity, saying “nothing can be done” in response to such harm sounds like tech inevitabilism to me. Should the same be argued about the worsening surveillance state (which is also being boosted with this technology)? Would it have been worthwhile to say nothing could be done about, say, CFCs, high fructose corn syrup, partially hydrogenated soybean oil, or leaded gasoline? Saying “this product is doing bad things but oh well it’s already invented” is tiresome fatalism to me.

Again the issue here is with capitalism not with technology. I personally don’t see anything uniquely harmful that’s inherent in LLMs, and I think that it’s interesting technology that has a lot of legitimate uses. However, it’s clear to me that this tech will be used in horrible ways under our current economic system just like all other tech that’s used in horrible ways already.

I’m not being fatalistic at all, I just think you’re barking up the wrong tree here.

I already said my part in the other reply I just gave and it also answers most of this post.

I will emphasize my first post in this thread: yes, LLMs are tools, and tools can be useful. I’d rather not give any inevitabilist arguments to the tool as if it requires special laissez-faire privileges that we don’t grant to, say, internal combustion engines or nuclear power.

I agreed with the content of the essay.

Idk who chose the headline, cuz the author’s take is far more measured than that. (Probably an editor optimizing for clickbait?)

I would caution, though, that the author is specifically talking about:

- the creation of art

- the way AI is developed and deployed in our capitalist context

I think there are more valid concerns about AI beyond the scope of those two areas, but I can’t blame the author for focusing on their area of expertise.

Not only am I against AI, I fully endorse doing a real life Butlerian Jihad asap

I should have expected this outcome in this thread: