- cross-posted to:

- europe@feddit.org

cross-posted from: https://sopuli.xyz/post/14914636

I am a fairly radical leftist and a pacifist and you wouldn’t believe the amount of hoo-ra military, toxic masculinity, explosions and people dying, gun-lover bullshit the YouTube algorithm has attempted to force down my throat in the past year. I block every single channel that they recommend yet I am still inundated.

Now, with shorts, it’s like they reset their whole algorithm entirely and put it into sensationalist overdrive to compete with TikTok.

I really want to know why their algorithm varies so wildly from person to person; this isn’t the first time I’ve seen people say this about YT.

But in comparison, their algorithm seems to be fairly good at recommending what I’m actually interested in, and none of that other crap people always complain about. And when it does recommend something I’m not interested in, it’s usually something benign, like a video on knitting or something.

None of this out-of-nowhere far-right BS gets pushed to me, and for a lot of what it does recommend, I can tell why.

For example, my feed is starting to show some lawn care/landscaping videos, and I know it’s likely related to the fact that I was looking up videos on how to restring my weed trimmer.

I think it depends on the things you watch. For example, if you watch a lot of counter-apologetics targeted towards Christianity, YouTube will eventually try out sending you pro-Christian apologetics videos. Similarly, if you watch a lot of anti-Conservative commentary, YouTube will try sending you Conservative crap, because they’re adjacent and share that “Conservative” thread.

Additionally, if you click on those videos and add a negative comment, the algorithm just knows you engaged with it, and it will then flood your feed with more.

It doesn’t care what your core interests are, it just aims for increasing your engagement by any means necessary.

Maybe it depends on what you watch. I use YouTube for music (only things that I search for) and sometimes live streams of an owl nest or something like that.

If I stick to that, the recommendations are sort of OK. Usually stuff I watched before. Little to no clickbait or random topics.

I clicked on one reaction video to a song I listened to just to see what would happen. The recommendations turned into like 90% reaction videos, plus a bunch of topics I’ve never shown any interest in. U.S. politics, the death penalty in Japan, gaming, Brexit, some Christian hymns, and brand new videos on random topics.

Same here. I’ve never watched anything like that, yet my recommendations are filled with far-right grifters, interspersed with tech and cooking videos that are more my jam.

YouTube seems to think I love Farage, US gun nuts, US free speech (to be racist) people, anti-LGBT (especially T) channels.

I keep saying I’m not interested, yet they keep trying to convert me. Like, fuck off, YouTube, no, I don’t want to see “Jordan Peterson completely OWNS female liberal using FACTS and LOGIC”.

Don’t know about YouTube, but I have a similar experience on Twitter. I believe they probably see blocking, muting or reporting as “interaction” and show more of the same as a result.

On YouTube, on the other hand, I never blocked a channel and almost never see militaristic or right-wing stuff (despite following some gun nerds, because I think they are funny).

Same here. I will just skip over them. At most, I will block a video I actually already watched on another platform like Patreon.

That does nothing for what’s recommended.

‘Not interested’ → ‘Tell us why’ → ‘I don’t like the video’ is what works.

I gave up and used an extension to remove video recommendations, block Shorts, and automatically redirect me from the home page to my subscriptions list. It’s a lot more pleasant to use now.

My shorts are full of cooking channels, “fun fact” spiky hair guy, Greenland lady, Michael from vsauce and aviation content. Solid 6.5/10

Shorts are pretty annoying in general, but I’ll be damned if they’re not great for presenting a recipe in a very short amount of time. Like, you don’t need to see the chef dicing four whole onions in real time.

I get all cooking, history, and movie reviews: Max Miller, Red Letter Media, Behind the Bastards, and four-hour history/archaeology documentaries.

Same here. No matter how many you block or mark as not interested, it just doesn’t stop.

The algorithm is just garbage at this point. I now watch YouTube exclusively through Invidious and can’t imagine going back.

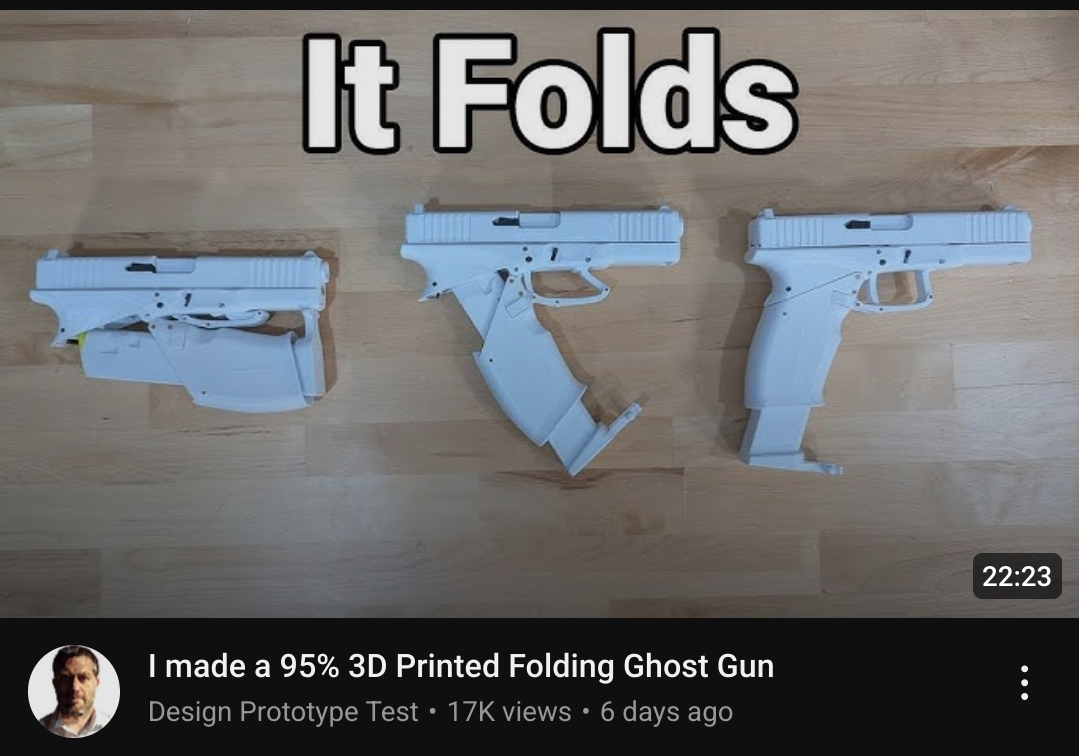

Saw crazy YouTube recommendations:

I totally agree with you! I identify as a ‘progressive liberal’ and had to click on ‘Don’t show me ad by this advertiser’ multiple times, yet I was pushed ads by the far right on YouTube and other websites (Google Ads) until the day of the election. A lot of those ads involved hate speech and blatant lies and were just full of propaganda. It’s a shame that these companies are able to force things down our throats and we’re not able to do much about it. And I’m someone who wouldn’t mind paying for something like ‘YouTube Premium’ to get rid of those ads, but I don’t, on principle, because the thought of supporting a company that’s propagating all this disgusting stuff just doesn’t sit right with me.

It’s at least good that my YouTube algorithm is otherwise dialed in, so I don’t get video recommendations of the same disgusting things. Just the ads. And while people here might reply that ads also follow their own algorithm, the far-right political parties in question had so much money to blow that they targeted the entire population rather than just a particular demographic (the right-leaning or neutral people out there). On clicking ‘Why am I seeing this advert’, I was always met with ‘Your Location’ as the reason.

Dunno what you watch, but YT may think the videos are about similar topics (even if you think otherwise).

For myself I usually get recommended what I already watch: Tech, vtuber/anime, mechanical engineering, oddities (like Weird Explorer, Technology Connections).

I rarely get stuff outside the bubble, like gun videos (some creator recently modified a Glock in the design of Milwaukee tools), meme channels, etc. I highly suspect the videos you watch and interact with heavily may feed back to YT differently than you think.

And remember: Negative feelings provoke interactions and increase the session time which is a plus for them.

Chinese company bytedance tries to fragilise western democracies episode 1937392

Chinese company bytedance tries to fragilise western democracies episode 1937392

Logically, the number should be higher than in the previous comments (just sayin’).

No no, this is a parallel show running right after the one before.

American company meta tries to fragilise western democracies and personal freedoms episode 1937392

You know two things can be true, right?

Of course, and they are. My point is that people hate on TikTok all the time because it’s Chinese and because it harvests data, while ignoring the fact that Facebook, Instagram, Snapchat, etc. are all doing the same stuff and don’t get met with a ban.

Because one is domestic and one is not. It’s pretty simple to understand. Geopolitics is still a thing.

One is domestic. Who still sells that data to China 😂

I assure you I have hated Facebook long before TikTok

It’s not China actively manipulating things. It’s the algorithm getting gamed by the right wing. It’s not like the Cambridge Analytica scandal, where Facebook was working directly with companies that were trying to get Trump elected. It’s more like the pipeline on YouTube, where algorithms that funnel people toward what’s popular end up being gamed by conservatives. In the US, TikTok was known for helping the “woke left”, so obviously it’s not the same big conspiracy controlling both.

The thing is, it can also be used for good in that it can show people things like what’s happening in Palestine without being censored by the US, like other US controlled social media has been.

Did anyone actually read the whole article? These comments sorta read like the answer is no.

The researchers say that their findings prove no active collaboration between TikTok and far-right parties like the AfD but that the platform’s structure gives bad actors an opportunity to flourish. “TikTok’s built-in features such as the ‘Others Searched For’ suggestions provides a poorly moderated space where the far-right, especially the AfD, is able to take advantage,” Miazia Schüler, a researcher with AI Forensics, tells WIRED.

A better headline might have been “TikTok algorithm gamed by far-right AfD party in Germany”, but I doubt that would drive as many clicks.

For more info, check out this article: Germany’s AfD on TikTok: The political battle for the youth

It doesn’t matter whether it is intentional or not; only the result matters: TikTok gave them a visibility boost. Whether it was “an algorithm” or any other aspect of the company is irrelevant.

Also, knowing how scummy these social media companies are and how they operate internally, there could be some internal memo telling the moderators to give the AfD free rein (this happened with Twitter and Libs of TikTok).

I wouldn’t just lump all “social media” companies together.

In this example an important difference is that Twitter is a porn site run by fascists.

That LoTT stuff was happening under the previous ownership of Twitter too, and most higher-ups do instruct their moderation teams not to curb far-right extremism at the same rate.

Ideas spread between humans. On systems designed to facilitate communications between people, these ideas will likewise spread. Did AfD exploit TikTok’s algorithm or is right wing populism seeing a large growth worldwide?

When the printing press came out and certain news outlets started “yellow journalism”, were they exploiting that system of communication for profit?

Did JFK and Nixon exploit TV for their own political purposes?

I believe wholeheartedly that every social media algorithm should be open source and transparent so the public can analyze what funny business is going on under the hood.

But is it any different from how TV channels pick what shows to play or what ads to run? Which articles get printed and the choice of words for a newspaper?

I think people are quick to jump on TikTok because of some unusual, socially acceptable jingoism, but I don’t see how, at its core, it is fundamentally different from other forms of media, let alone other popular social media platforms.

It definitely matters. There’s a huge difference between promoting nazis and writing an algorithm that’s gamed by nazis.

This headline is designed to mislead people by erasing that difference. And the motives of people who have been attacking tiktok are generally trash.

But being so big and influential as these platforms are, they should not have the option of “oops, sorry, didn’t think about that”. (I am aware I’m being idealistic)

We’ll have to agree to disagree. I prefer nuance to oversimplification.

2004: The Internet will lead to a utopian society without gatekeepers, without censorship, where we get our information from each other and primary sources, not media companies!

2024: The Internet is based on algorithmic attention. Those who control the algorithms control what parts of the immeasurably large pile of data will get attention and which not. Yet they are protected by the same intermediary liability laws as if they were traditional web forums, blog hosters, wikis with no personalized algorithm (for which those laws are very good and necessary).

https://lemmy.world/comment/11235801

Stop using this to push your agenda about censorship and whatever. Censorship has never led to anything good. Read the history books.

Did you reply to the wrong comment? Where did I defend censorship?

They are confusing censorship with manipulation. The algorithm is working.

To be fair, the comment mentioned censorship but not manipulation.

I’m a bit confused about where you got the idea it was about manipulation when it was really about the rage algorithms.

But obviously the commenter misread the comment completely and thought OP was advocating for censorship when in reality it was his description of Internet utopia.

The children will defend TikTok with their lives, crying about how it doesn’t matter that non-allied foreign powers can manipulate the algos and narratives.

I don’t see this as a China problem. It’s a lack of regulations and oversight problem. Allied foreign powers (or one specific power to be precise) push far right via social media onto Europe as well.

He doesn’t see that specific allied power interfering with European politics because he is from the US.

It’s both.

The companies who do this shit absolutely need to be regulated way more aggressively.

TikTok (ByteDance), being a Chinese company based in the PRC, is compelled to operate in partnership with the CCP by law, which gives the CCP an insane degree of visibility and control into their systems. I would be absolutely unsurprised to find that the CCP is compelling them to tweak their algorithms and push specific content to specific audiences, in addition to the data gathering they’re surely engaged in. Source: I work for an oncology biotech, and we halted our Chinese efforts because there was apparently no legal way to square the circle with regard to data privacy/HIPAA considerations.

I’m neither a child nor defending TikTok, but it doesn’t matter who manipulates it. No country, “allied” or otherwise, should interfere in the democratic process of another country.

In my experience, TikTok isn’t pushing it; YouTube Shorts, however, very much is. For me, at least.

This comment section is now the property of the Federal Republic of Germany

It worked better on Reddit

Oh well

Nothing you can do about it…

And then you wonder what the problem was. ¯\_(ツ)_/¯

I am

Huh, why?

They push whoever uses more bots and pays for better advertising.

Da

Terrible headline.

god, i can’t imagine getting politics on tiktok. i thank goddess every day that i’m well within the confines of booktok, piratetok, hazbintok, and cattok.

They may be the only major party that talks about what youth might want. Most other parties almost ignore youth.

But then, the AfD is basically reviving the “Hitlerjugend” (Hitler Youth), so there’s that.

That is such a bullshit point. “The youth” doesn’t want one homogeneous thing. The youth is just as diverse in opinions as other cohorts, maybe even more so. It is also more likely to be on the more radical sides of the political spectrum.

Also, is this another example of “90% of the people who voted AfD were men,” and then opposite them you’ll have the far left getting 90% women?

Not in this specific age group. Overall, men are overrepresented (not by 90%, but they are), though less so among younger people.

Yes, they do not want one thing. But that is what they appeal to.

Appealing to teens’ emotions, completely contradicting themselves, just to score some popularity points. I think this is called populism, but I’m not sure.

Also, the AfD is extremely radical, so that fits.

While I guess that’s true, and it’s often surprising that the AfD is polling that well in the younger cohorts, let’s not overstate their success. There are also a lot of people in that cohort who very vehemently disagree with the AfD.

Not saying they’re successful. They just seem like the only party that actually tries to talk to teens on their level. Still complete bullshit, though.

I hope other parties start taking teens more seriously.

what youth might want

A pure aryan skull shape?

Oohhhhh noooooooooo The Youth aren’t getting what they Waaaaaant! Politics is bad!

Ooooh the Huge Manatee!!

Who TF cares? Why the fk is the left so hell-bent on censorship and controlling all narratives?

“Why are leftists so concerned over foreign authoritarian governments directly influencing the elections in their countries???”

Unless there’s a clear violation of elections, like ballot stuffing and fraudulent votes by non-citizens and dead citizens… I couldn’t give a fk less. People have a right to speak their minds, whether it’s intelligent or psychobabble.

You are either incredibly naive or speaking in bad faith. Pushing propaganda to mislead the public in elections is much more dangerous than stuffing a few ballots into the box.

That’s rich… let NBC and MSNBC know how you feel as a Dem/Progressive/Leftist etc…