This is tilting at windmills. If someone has physical possession of a piece of hardware, you should assume that it’s been compromised down to the silicon, no matter what clever tricks they’ve tried to stymie hackers with. Also, the analog hole will always exist. Just generate a deepfake and then take a picture of it.

You have it backwards. This is not to stop fake photos, despite the awful headline. It’s an attempt to provide a chain of custody and attestation: “I trust Tom only takes real photos, and I can see this thing came from Tom.”

And if the credentials get published to a suitable public timestamped database you can also say “we know this photo existed in this form at this specific time.” One of the examples mentioned in the article is the situation where that hospital got blown up in Gaza and Israel posted video of Hamas launching rockets to try to prove that Hamas did it, and the lack of a reliable timestamp on the video made it somewhat useless. If the video had been taken with something that published certificates within minutes of making it that would have settled the question.

That doesn’t really work. If the private key is leaked, you’re left in a quandary of “Well who knew the private key at this timestamp?” and it becomes a guessing game.

Especially in the scenario you posit. Nation-state actors with deep pockets in the middle of a war will find ways to bend hardware to their will. Blindly trusting a record just because it’s timestamped is foolish.

You’re right, it isn’t perfect so we shouldn’t bother trying. 🙄

In this case yes, because if it’s not perfect, then it’s perfectly useless

Couldn’t I just change the camera date?

We’re talking about a signature that’s published in a public database. The camera’s timestamp doesn’t matter, just the database’s.

If all that you’re interested in is the timestamp then you don’t even really need to have a signature at all - just the hash of the image is sufficient to prove when it was taken. The signature is only important if you care about trying to establish who took the picture, which in the case of this hospital explosion is not as important.

How is a hash of the image supposed to prove anything about when it was created?

You post it publicly somewhere that has a timestamp. A blockchain would be best because it can’t be tampered with.
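A minimal sketch of the hash-commitment idea, assuming SHA-256 and made-up byte strings in place of real image files. The digest is what would get posted somewhere timestamped; later, anyone holding the image can recompute it and compare. On its own this only shows the image existed no later than the posting time, and it says nothing about who took it.

```python
import hashlib

def commit(image_bytes: bytes) -> str:
    """Return the SHA-256 digest you would publish somewhere timestamped."""
    return hashlib.sha256(image_bytes).hexdigest()

def matches_commitment(image_bytes: bytes, published_digest: str) -> bool:
    """Anyone holding the image can recompute the digest and compare."""
    return hashlib.sha256(image_bytes).hexdigest() == published_digest

# Hypothetical stand-ins for real image files.
original = b"\xff\xd8 raw JPEG bytes from the camera \xff\xd9"
edited = original.replace(b"camera", b"editor")

digest = commit(original)                     # publish this string
print(matches_commitment(original, digest))  # True
print(matches_commitment(edited, digest))    # False
```

The published digest reveals nothing about the image content, so it can be posted immediately without leaking the photo itself.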

Ah, I thought you were saying the hash proved something on its own. Lots of weird ideas about crypto in this thread.

That proves it existed at a specific time in the past, not that it didn’t exist before that. What’s stopping a hash of the Mona Lisa on a block chain with today’s date?

Maybe each camera has a different public/private key?

They would, but each camera’s private key can be extracted from the hardware if you’re motivated enough.

If Alice’s fancy new camera has the private key extracted by Eve without Alice’s knowledge, Eve can send Bob pictures that Bob would then believe are from Alice. If Bob finds out that Alice’s key was compromised, then he has to guess as to whether any photo he got from Alice was actually from Eve. Having a public timestamp for the picture doesn’t help Bob know anything, since Eve might’ve gone and created the timestamp herself without Alice’s knowledge.
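To make Bob’s problem concrete, here is a toy sketch using textbook RSA with tiny hard-coded primes (insecure, illustration only; not what any real camera uses). Verification only proves that whoever signed held the private key, so a signature made with Alice’s stolen key passes exactly like one made by Alice.

```python
import hashlib

# Textbook RSA with tiny, hard-coded primes: insecure, for illustration only.
P, Q = 61, 53
N = P * Q                           # public modulus (3233)
E = 17                              # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (needs Python 3.8+)

def digest_mod_n(message: bytes) -> int:
    # Reduce a SHA-256 hash into the toy modulus; real schemes use proper padding.
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes, private_exp: int) -> int:
    return pow(digest_mod_n(message), private_exp, N)

def verify(message: bytes, signature: int, public_exp: int = E) -> bool:
    return pow(signature, public_exp, N) == digest_mod_n(message)

alice_photo = b"photo Alice actually took"
eve_photo = b"photo Eve fabricated"

alice_sig = sign(alice_photo, D)   # signed inside Alice's camera
stolen_d = D                       # Eve extracts the very same private key
eve_sig = sign(eve_photo, stolen_d)

# Bob only has Alice's public key (N, E); both checks pass identically.
print(verify(alice_photo, alice_sig))  # True
print(verify(eve_photo, eve_sig))      # True
```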

Still, unique keys for each camera would lessen the risk of someone leaking a single code that undermines the whole system, as happened with DVDs.

And if an interested party wanted to steal a camera’s private key to fake an image’s provenance they’d need to get physical access to that very camera. Perhaps a state-sponsored group could contrive this (or intervene during manufacturing), but it is a challenge and an even bigger challenge for everyone else.

Physical access means all bets are off, but it’s not required for these attacks. If it’s got a way to communicate with the outside world, it can get hacked remotely. For example, here’s an attack that silently took over iPhones without the user doing anything. That was used for real to spy on many people, and Apple is pretty good at security. Most devices you own, such as cameras with Wi-Fi, will likely be far worse security-wise.

And Tom’s camera gets hacked by an evil maid and then where are you? Exactly. This is snake oil.

Unless the evil maid is also capable of time travel there’s no way for them to mess with the timestamps of things once they’ve been published. She could take some pictures with the camera but not tamper with ones that have already been taken.
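A toy sketch of why already-published entries are hard to rewrite, assuming a simple hash-chained append-only log (the general idea behind blockchains and transparency logs, not any specific service). The timestamps and photo bytes are hypothetical. Each entry commits to the previous entry’s hash, so editing an old entry breaks verification.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def entry_hash(body: dict) -> str:
    # Canonical JSON so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

@dataclass
class HashChainLog:
    """Toy append-only log: every entry commits to the hash of the one before it."""
    entries: list = field(default_factory=list)

    def append(self, timestamp: str, image_digest: str) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"timestamp": timestamp, "image_digest": image_digest, "prev": prev}
        h = entry_hash(body)
        self.entries.append({**body, "hash": h})
        return h  # the new head; copying it elsewhere is what pins the history

    def verify(self) -> bool:
        prev = GENESIS
        for e in self.entries:
            body = {"timestamp": e["timestamp"], "image_digest": e["image_digest"], "prev": e["prev"]}
            if e["prev"] != prev or e["hash"] != entry_hash(body):
                return False
            prev = e["hash"]
        return True

log = HashChainLog()
log.append("2024-01-01T12:00:00Z", hashlib.sha256(b"original photo").hexdigest())
log.append("2024-01-01T12:05:00Z", hashlib.sha256(b"another photo").hexdigest())
print(log.verify())  # True

# The "evil maid" rewrites the first entry to point at a doctored image.
log.entries[0]["image_digest"] = hashlib.sha256(b"doctored photo").hexdigest()
print(log.verify())  # False
```

One caveat: someone holding the only copy could recompute every hash and produce a self-consistent forgery. The protection comes from the head hash having already been copied to many independent places, which is the role a public blockchain or transparency log plays.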

The evil maid could take a copy of a legitimate image, modify it, publish it, and say that the original image was faked. If there’s a public timestamp of the original image, just say “Oh, hackers published it before I could, but this one is definitely the original”. The map is not the territory, and the blockchain is not what actually happened.

Digital signatures and public signatures via blockchain solve nothing here.

> The evil maid could take a copy of a legitimate image, modify it, publish it, and say that the original image was faked.

No she could not, the original image’s timestamp has already been published. The evil maid has no access to the published data.

> “Oh, hackers published it before I could, but this one is definitely the original”

And then the evil maid is promptly laughed out of the building by everyone who actually understands how this works. Your evil maid is depending on “trust me, bro” whereas the whole point of this technology is to remove the need for that trust.

> original image’s timestamp has already been published

“Oh the incorrect information was published, here’s the correct info”. Again, the map is not the territory.

> the whole point of this technology is to remove the need for that trust.

And it utterly fails to achieve that here. I’ll put it another way: You have this fancy camera. You get detained by the feds for some reason. While you’re detained, they extract your private keys and publish a doctored image, purportedly from your camera. The image is used as evidence to jail you. The digital signature is valid and the public timestamp is verifiable. You later leave jail and sue to get your camera back. You then publish the original image from your camera that proves you shouldn’t have been jailed. The digital signature is valid and the public timestamp is verifiable. None of that matters, because you’re going to say “trust me, bro”. Introducing public signatures via the blockchain has accomplished absolutely nothing.

You’re trying to apply blockchain inappropriately. The one thing that publishing like this does is prove that someone knew something at that time. You can’t prove that only that person knew something. You can prove that someone had a private key at time X, but you cannot prove that nobody else had it. You can prove that someone had an image with a valid digital signature at time X, but you cannot prove that it is the unaltered original.

> “Oh the incorrect information was published, here’s the correct info”. Again, the map is not the territory.

And again, your “attack” relies on the evil maid saying “just trust me bro” and people taking her word on that. The “incorrect information” is provably published before the supposed “correct information” was.

The whole point of building this stuff into the camera is so that the timestamp can be published immediately. Snap the photo and within seconds the timestamp is out there. If the photographer doesn’t have that enabled then he’s not actually using the system as designed, so he shouldn’t be surprised if it doesn’t work right. If he uses it as designed then it will work.

> The one thing that publishing like this does is prove that someone knew something at that time. You can’t prove that only that person knew something.

So? That’s not the goal here.

If only I knew how to create my own firmware for Leica… then I could call the same crypto-chip and sign any picture I’d like. (Oh wait! There’s a github for hacking Leica M8 firmware!)

Ah, DRM for your photos.

Great.

Not at all. From what I understand of this article, it wouldn’t stop you from doing anything you wanted with the image. It just generates a signed certificate at the moment the picture is taken that authenticates that that particular image existed at that particular time. You can copy the image if you like.

Forgive the cynicism, but: free, for now.

What happens when the company decides all of a sudden to lock the service behind a subscription paywall?

Do you still maintain rights to your photos when you use this service?

I have no idea what you’re proposing be “locked behind a subscription pay wall.” The certificate exists and is public from the moment the picture is taken. It can be validated by anyone from that point forward, otherwise it would be pointless. Post the timestamp and the public key on a public blockchain and there’s nothing that can be “taken away” after that.

Your rights to your photos are from your copyright on them. This service shouldn’t affect that. Read the EULA and don’t sign your rights away and there’s no way they can be taken.

I suppose if they are running some kind of identity-verification service they could cut you off from that and prevent future photos you take from being signed after that, but that doesn’t change the past.

What happens is the signature attached to the photo becomes impossible to maintain when the photo is edited, but the photos themselves are no different from any other photo. In other words, just a return to the status quo.

This is an adorable show of optimism.

🙄

Digital signatures are not nefarious. Quit freaking out about things just because you don’t understand them.

It’s how this works.

This isn’t DRM. I can’t believe you have so many upvotes for such blatant FUD.

Welcome to ~~Reddit~~ Lemmy, where everyone just reads the title and jumps to conclusions based on that

I think this is probably great for specific forensic work and similar but the problem with deepfakes isn’t that people can’t determine their veracity. The problem is that people see a picture online and don’t bother to even check. We have news sources that care about being accurate and trustworthy yet people just choose to ignore them and believe what they want.

> “that it’s a true representation of what someone saw.”

Someone please correct me if I’m wrong but photography has never ever ever been a “true” representation of what you took a picture of.

Photography is right up there with statistics in its potential for “true” information to be used to draw misleading or false conclusions. I predict that a picture with this technology may carry along with it the authority to impose a reality that’s actually not true by pointing to this built-in encryption to say “see? the picture is real” when the deception was actually carried out by the framing or timing of the picture, as has been done often throughout history.

You’re talking about “the whole truth”. If the whole is true, then all of the parts are true, so photographing only a subset of the truth (framing) is still true. If a series of events are true, then each event is true, so taking a picture at a certain time (timing) is also true.

Photos capture real photons that were present at real scenes and turn them into grids of pixels. Real photographs are all “true”. Photoshop and AI don’t need photons and can generate pixels from nothing.

That’s what is being said.

Nah, lying by omission can still tell a totally wrong narrative. Sometimes it has to be the whole truth to be the truth.

You’d make a bad programmer or mathematician.

Well… Mathematicians would agree with me

Nope

As I understand it, it’s a digital signature scheme where the raw image is signed at the camera, and modifications in compliant software are signed as well. So it’s not so much “this picture is 100% real, no backsies”. Nor is it “We know all the things done to this picture”, as I doubt people who modify these photos want us to know what they are modifying.

So it’s more like “This picture has been modified, like all pictures are, but we can prove how many times it was touched, and who touched it”. They might even be able to prove when all that stuff happened.
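A rough sketch of that “who touched it, and how many times” idea, assuming each step records the actor, the hash of its input, and the hash of its output, then signs that claim. This is loosely inspired by the C2PA approach but is not its actual format, and HMAC with hypothetical per-actor keys stands in for real public-key signatures so the example stays stdlib-only (in a real system verifiers would only hold public keys).

```python
import hashlib
import hmac
import json

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

# Hypothetical per-actor secrets standing in for real private keys.
KEYS = {"camera": b"camera-secret", "editor": b"editor-secret"}

def _mac(actor: str, claim: dict) -> str:
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(KEYS[actor], payload, hashlib.sha256).hexdigest()

def sign_step(actor: str, parent_hash, output: bytes, action: str) -> dict:
    claim = {"actor": actor, "action": action, "parent": parent_hash, "output": sha256_hex(output)}
    return {**claim, "sig": _mac(actor, claim)}

def verify_chain(steps: list, final_asset: bytes) -> bool:
    parent = None  # the capture step has no parent
    for step in steps:
        claim = {k: step[k] for k in ("actor", "action", "parent", "output")}
        if not hmac.compare_digest(_mac(step["actor"], claim), step["sig"]):
            return False  # claim altered after signing
        if step["parent"] != parent:
            return False  # step does not build on the previous output
        parent = step["output"]
    return parent == sha256_hex(final_asset)

raw = b"raw sensor data"
cropped = b"cropped version"
steps = [
    sign_step("camera", None, raw, "captured"),
    sign_step("editor", sha256_hex(raw), cropped, "cropped"),
]
print(verify_chain(steps, cropped))            # True
print(verify_chain(steps, b"something else"))  # False
```

Note that nothing here says whether the scene in front of the lens was staged; it only records who signed which transformation.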

Even that doesn’t do much to prove the image is an authentic representation of anything. People have been staging photos for as long as there have been photos, and no camera can guard against that.

So basically I would just have to screenshot the image or export it to a new file type that doesn’t support their fancy encryption and then I can do whatever I want with the photo?

The point is that they can show anybody interested the original with the signature from the camera.

The problem is that you can likely attack the camera’s security chip to sign any photo, since internally the photo comes off the CMOS sensor unsigned and the camera only signs it before writing it to storage.
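A sketch of that argument, assuming a hypothetical signing chip whose key never leaves it but which signs whatever bytes the firmware hands it. HMAC stands in for the chip’s real public-key signature to keep this stdlib-only. Both outputs verify, because the chip has no way of knowing where the bytes came from.

```python
import hashlib
import hmac

class SecureElement:
    """Hypothetical signing chip: the key never leaves it, but it signs any bytes it is given."""
    def __init__(self, key: bytes):
        self._key = key

    def sign(self, image: bytes) -> str:
        return hmac.new(self._key, image, hashlib.sha256).hexdigest()

def verify(key: bytes, image: bytes, sig: str) -> bool:
    # Stand-in for public-key verification.
    return hmac.compare_digest(hmac.new(key, image, hashlib.sha256).hexdigest(), sig)

DEVICE_KEY = b"hypothetical-device-key"
chip = SecureElement(DEVICE_KEY)

def stock_firmware(sensor_readout: bytes) -> tuple:
    # Normal path: sign exactly what the sensor produced.
    return sensor_readout, chip.sign(sensor_readout)

def modified_firmware(_sensor_readout: bytes, injected: bytes) -> tuple:
    # Modified path: ignore the sensor and hand the chip arbitrary bytes.
    return injected, chip.sign(injected)

real = stock_firmware(b"pixels from the CMOS sensor")
fake = modified_firmware(b"pixels from the CMOS sensor", b"deepfake pixels")
print(verify(DEVICE_KEY, *real))  # True
print(verify(DEVICE_KEY, *fake))  # True
```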

Just like stealing an NFT.

You wouldn’t download a car

i would probably download a cock and suck it

It’s signed, not encrypted. Think of it as a chain of custody mark. The original photo was signed by person X, and then edited by news source Y. The validity of that chain can be verified, and the reliability judged based on that.

Effectively it ties the veracity and accuracy of the photo to a few identifiable parties. E.g. a photo from a known, reputable war photographer, edited under the New York Times’ licence, would carry a lot more weight than a random unsigned photo found online, or one published by some random online rag.

You can break the chain, but not fake the chain.
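A minimal sketch of “break, but not fake”, assuming a single signed credential per image and HMAC with a hypothetical key standing in for a real signature. Stripping the credential leaves the image merely unverified; altering the image while keeping the credential makes it fail outright.

```python
import hashlib
import hmac
from typing import Optional

KEY = b"hypothetical-signer-key"  # stands in for a real signing key pair

def sign(image: bytes) -> str:
    return hmac.new(KEY, image, hashlib.sha256).hexdigest()

def check(image: bytes, credential: Optional[str]) -> str:
    if credential is None:
        return "no credentials"  # chain broken: provenance unknown, not disproven
    if hmac.compare_digest(sign(image), credential):
        return "verified"
    return "invalid"             # content altered after signing

photo = b"war photographer's original"
cred = sign(photo)

print(check(photo, cred))                    # verified
print(check(photo + b" (retouched)", cred))  # invalid
print(check(photo + b" (retouched)", None))  # no credentials
```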

Everyone talking about hacking the firmware to extract the private key

Me just taking a photo of the deepfake

Maybe I am misunderstanding here, but what is going to stop anyone from just editing the photo anyway? There will still be a valid certificate attached. You can change the metadata to match the cert details. So… ??

I don’t know about this specific product but in general a digital signature is generated based on the content being signed, so any change to the content will make the signature invalid. It’s the whole point of using a signature.

I was too tired to investigate further last night. That is the case here, sections of data are hashed and used to create the certs:

https://c2pa.org/specifications/specifications/1.3/specs/C2PA_Specification.html#_hard_bindings

Which means that there isn’t a way to edit the photo and have the cert match, and also no way to compress or change the file encoding without invalidating the cert.
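A simplified sketch of a hard binding, loosely modeled on the exclusion-range idea in the linked spec section: the hash covers every byte of the file except the slot where the manifest itself is embedded. The file layout and byte strings are hypothetical, and exclusion ranges are assumed not to overlap.

```python
import hashlib

def hash_with_exclusions(asset: bytes, exclusions: list) -> str:
    """SHA-256 over every byte except the (start, length) ranges listed,
    e.g. the region where the manifest is embedded. Ranges must not overlap."""
    h = hashlib.sha256()
    pos = 0
    for start, length in sorted(exclusions):
        h.update(asset[pos:start])
        pos = start + length
    h.update(asset[pos:])
    return h.hexdigest()

# Hypothetical layout: 4-byte header, 8-byte manifest slot, then image data.
asset = b"HDR0" + b"MANIFEST" + b"pixel data..."
exclusions = [(4, 8)]
bound = hash_with_exclusions(asset, exclusions)

# Filling in the manifest slot does not change the bound hash...
with_manifest = b"HDR0" + b"SIGNED!!" + b"pixel data..."
print(hash_with_exclusions(with_manifest, exclusions) == bound)  # True

# ...but flipping one bit of pixel data does.
tampered = bytearray(with_manifest)
tampered[-1] ^= 0x01
print(hash_with_exclusions(bytes(tampered), exclusions) == bound)  # False
```

Recompressing or converting the file rewrites the covered bytes, which is why re-encoding invalidates the binding just like an edit does.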

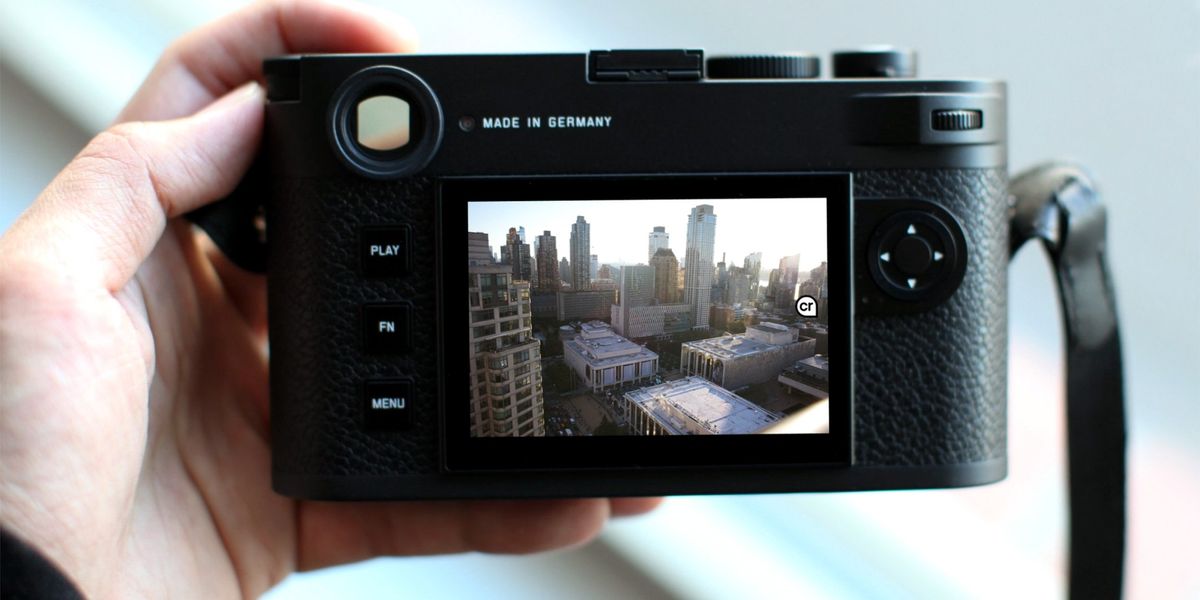

So it’s for JPEG shooters, basically. Unfortunately, Leica bodies aren’t really known for producing good JPEGs.

I’m not an expert in encryption, but I think you could store a key in the device that encrypts the hash, and then that encrypted hash is verified by Leica servers?
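What’s described here is essentially a digital signature rather than encryption: the device’s private key signs the hash, and anyone holding the matching public key can check it, in principle without contacting the manufacturer. The sketch below uses a Lamport one-time signature purely because it can be written with `hashlib` alone; it is not what Leica or C2PA actually use, and each key pair must sign only one message.

```python
import hashlib
import secrets

def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen():
    # 256 pairs of random secrets; the public key is their hashes.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk

def bits(digest: bytes):
    # Yield the 256 bits of a SHA-256 digest, most significant first.
    for byte in digest:
        for i in range(7, -1, -1):
            yield (byte >> i) & 1

def sign(image: bytes, sk) -> list:
    # Reveal one secret per bit of the image hash. One-time: never reuse sk.
    return [sk[i][bit] for i, bit in enumerate(bits(H(image)))]

def verify(image: bytes, sig: list, pk) -> bool:
    # Only public data is needed: hash each revealed secret and compare.
    return len(sig) == 256 and all(
        H(s) == pk[i][bit] for i, (s, bit) in enumerate(zip(sig, bits(H(image))))
    )

sk, pk = keygen()          # sk stays in the camera; pk is published
photo = b"image bytes"
sig = sign(photo, sk)
print(verify(photo, sig, pk))         # True
print(verify(photo + b"!", sig, pk))  # False
```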

Ctrl + F “Blockchain”

… Oh?

Well, that’s a surprise: a system that actually is comparable to a blockchain in a different medium doesn’t plaster it everywhere. We’ve certainly seen it plastered over things with much, much less relevance.

Neat tech. Hope it catches on.

And where do you see any resemblance to a blockchain?

From the article it is just cryptographic signing - once by the camera with its built-in key and once on changes by the CAI tool which has its own key.

Great article/paper on why this isn’t a good idea: https://www.hackerfactor.com/blog/index.php?/archives/1010-C2PAs-Butterfly-Effect.html

That was a great read. I love take downs.

InformaCam has a similar “chain of custody” goal but was developed for existing devices. The Guardian Project was involved with CameraV, the Android version for mobile devices. It looks like ProofMode is now the active project, and it’s available for iOS as well as Android. https://proofmode.org/

Damn, $9,000?

It is a Leica.

Yeah. In EuroTrip a dork got a BJ just for owning a Leica.

I was wondering when crypto content would become a thing like this.

It’s one of the most obvious uses for it, I’ve suggested this sort of thing many times in threads where people demand “name one actually practical use for blockchains.” Of course so many people have a fundamental hatred of all things blockchain at this point that it’s probably best not to advertise it now. Just say what it can do for you and leave the details in the documentation for people to dig for if they really want to know.

This is cool and all. But I am more concerned about finding a way to prevent my images from being scraped for AI training.

Something like an imperceptible gray grid over the image that would throw off the AI training, and not force people to use certain browsers / apps.

This is awesome, thanks for sharing!

After reading, I think it perceptibly alters the image, but I’m definitely going to play around with it and see what’s possible.

Beware, this is made by Ben Zhao, the University of Chicago professor who stole open source code for his last data poisoning scheme.