Episode premise:

Kivas Fajo is determined to add the unique Data to his prized collection of one-of-a-kind artefacts and, staging Data’s apparent death, he imprisons him aboard his ship.

We know that Data is later logically coerced to lie in “Clues” to protect the crew, but this appears to be a decision all his own. Or did he not in fact actually fire the weapon?

Contrary to popular belief, there is nothing in Data’s programming that would prevent him from doing “bad” things like lying or killing. He has free will just as much as any other Starfleet officer.

Data is much more human than you might guess at first. He is more akin to a human on the autism spectrum than a robot with hard-coded programming.

Absolutely. Rewatching the series in full as an adult made it more apparent that Data was always closer to his goal than he could comprehend. Just had trouble adjusting to social “norms” more than others.

Yup exactly. He just lacked emotional subroutines (at first) and the hardware to process that. But he doesn’t need emotions to kill. He is in fact capable of using lethal force (First Contact), he just has an ethical subroutine that prevents killing (Descent I, II) unless in defense of others, himself, or The Federation. Which would fall under his logical subroutines.

Similar in a way to Chief Engineer Hemmer who will not use violence (Memento Mori) unless in an act of preserving life. The means to defend is part of the training of a Starfleet officer.

This is honestly something that kind of annoys me about the show: Data is pretty obviously human enough from the get-go, and his journey is just about generally figuring things out and forming a proper personality.

Like the episode where they have to put his personhood to trial isn’t that amazing, humans now overwhelmingly at least somewhat care about the well-being of actual cattle, how the fuck would a clearly human-looking android that’s clearly capable of reasoning not be considered a person just like any other humanoid alien species?

It would have made sense if it took place in humanity’s capitalist past, but the largely enlightened Federation? come oooooooon

i like that The Orville has their obligatory digital lifeform be from a whole-ass race that considers themselves obviously superior to everyone else, and no one questions their personhood because how the fuck do you question the personhood of someone who is actively choosing not to pulverize you?

Data: “Perhaps something occurred during transport, Commander”

Riker: “Like what?”

Data: “Like I tried to shoot the motherfucker but you beamed me away too quick.”

O’Brien: “removed be crazy…”

“Deactivating it” meant he stored it in another pattern buffer for future use. Could always add it to a troublesome person’s transporter beam…

Did he lie? Or did he give a vague statement that is necessarily true?

Data is an Aes Sedai confirmed.

WoT references! a rare treasure from a long lost age (which may hopefully come again…)

The only community I truly miss from reddit is wetlander humor.

There is a wetlander humor but it is very low activity

Sorry, should have said an active wetlander humor community. I’m actually a subscriber on that community.

The question is what Ajah he would end up in. The Whites, Grays, and Browns would all want him, but he might be a Blue at heart

O’Brien deactivated the weapon, so something did happen during transport.

Yes. Perhaps it did.

Data:

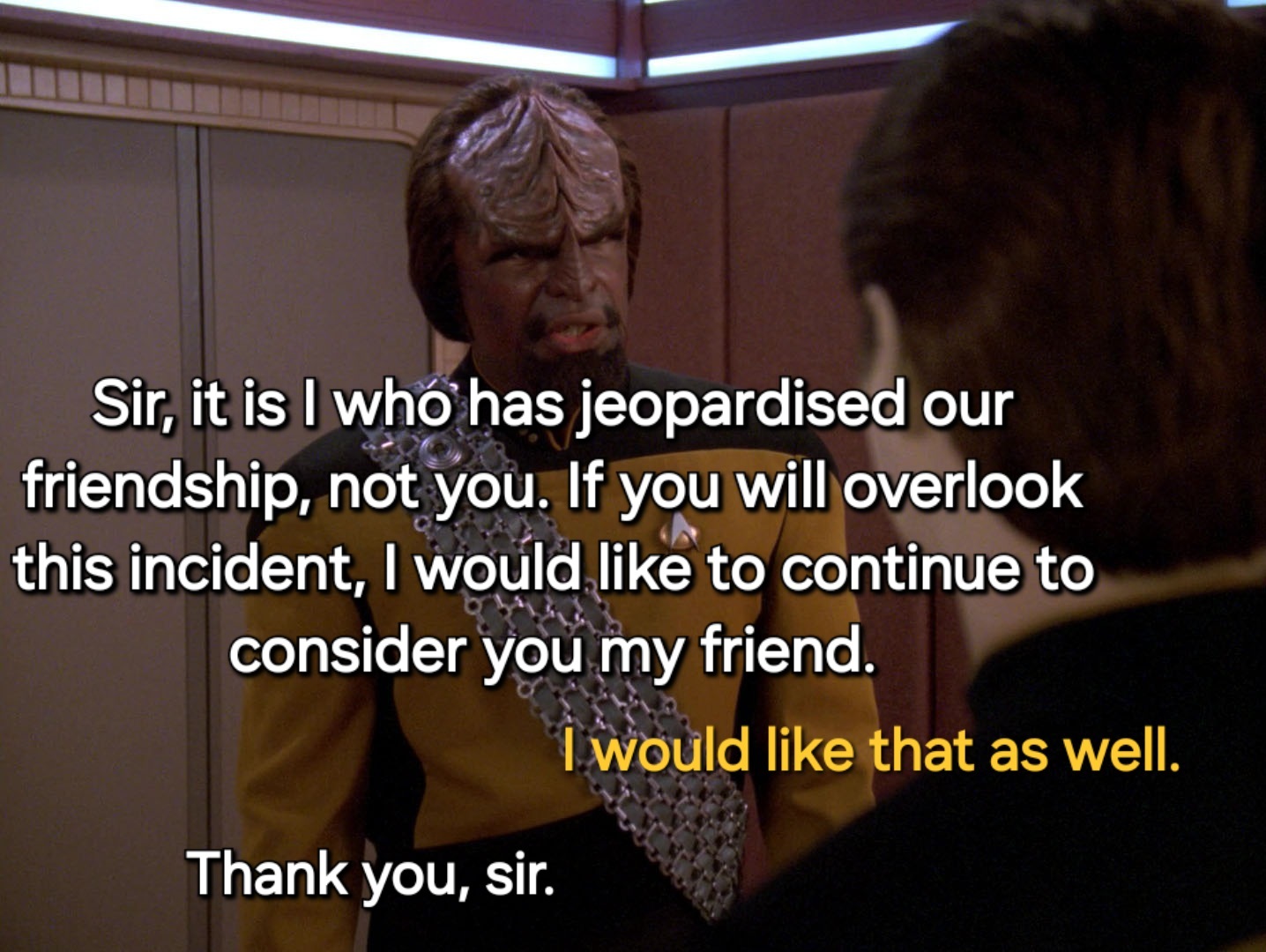

Reminded me of one of the best exchanges between Data and Worf from Gambit Part 2:

And Worf has no idea …

Over my head like a 747. Can you explain for my smooth brain what I missed here?

Data agreed with Worf that Worf wanted to continue to be his friend. It is only implied that Data wants to be Worf’s friend. Kinda brutal.

The idea that Data completely lacks emotion was always hollow to me. People don’t especially understand what emotions are or what it means to feel them. I think we lie to ourselves quite a lot that our decisions are “purely rational” even though everything in our environments influences those decisions.

Who hasn’t made a bad call because they were: tired, hungry, overheated, angry, or otherwise being affected by emotions? Is hunger an emotion? When Data decides that he will practice music today, is that part of an elaborate schedule he has planned years in advance, or is that what he “felt like” doing that day? Perhaps a long string of logic could explain why today is a good day to Vi-olen, but how is that different from the rationale I could put together for why I made a decision?

So what I’m saying is: I blame the writers! I think by season 3 they’d explored a little of the possibilities with Data about what humanity is and what it means to work with an android, but I don’t know that they ever really got a handle on what it would look like for a being of pure reason to emergently develop emotions.

Look at chatgpt and how readily it convinces people that there is a thinking being in there. When an LLM says “I’m happy to see you today, what can I do for you?” do we take that as a canned response with no real feeling behind it, or do we assume that because it can say it is happy, that it must be feeling happy?

Do we get much perspective on Data’s interiority? Perhaps he experiences a world of emotions we can’t even comprehend but has no understanding of how to express these things? His art work is called out as being soulless and copy-cat at various times. But also Data has a cat, and a daughter, and many friends. He tells bad jokes. It seems like there’s some kind of feelings going on in there, even if it comes out in his actions and not in his art.

This is actually why I kinda liked Pulaski. When she first meets him, she calls bullshit on the idea that he has no emotions. She mispronounces his name, and when Data corrects her, she immediately homes in on that; why should you have a preference if you don’t feel anything about it? But people keep telling her that he doesn’t have emotions, so she’s basically like, “Alright, fine, I’ll treat him like a calculator.” And honestly, why wouldn’t you? If he doesn’t have emotions, then why bother with pleasantries? It’s not like he’s going to get offended. She eventually does come around to him, but she does that by basically coming to the conclusion that he does care, given his actions during that episode with the children and the aging disease (I don’t remember the name and I’m too lazy to look it up).

It was always very clear to me that Data had emotions. How could he not? He has desires, wants, preferences…you can’t have those things without feeling something. It just seems like they’re very distant, numb feelings, rather than strong sensations. And it kinda makes sense to build him that way; Lore was created with much more advanced emotions, and he’s a little psychotic. It makes more sense to have his feelings be slightly out of reach and let them grow with his positronic brain, so he can learn to handle them over time.

I never liked the, “emotion chip,” solution to Data’s feelings. It seems like they never explored his emotional development because they didn’t want to make any status quo changes on a mostly episode-of-the-week show. Then they created an emotion MacGuffin they never intended to use and said, “fuck it, let’s use it for the movie!” But in the end, I believe we were always meant to think the same thing about Data as we were about Spock: “I know this guy says he doesn’t have emotions, but I think he’s full of shit.”

Basically the same thing with Vulcans. Vulcans show their emotions all the time, it’s just almost always mild irritation and impatience.

In the end, human emotions are motivators. They decide our actions. If we’re happy with something, we continue doing it, if it hurts, we stop it. Data clearly has a system in place that motivates him to do things.

These might be emotions, like you say, but this is not the only possibility. Can we really imagine what a motivator would be that is not an emotion? I don’t think so. But I think this is what Data has. It’s not emotions like we experience them, but it’s something that causes him to do things/stop doing things. Now these motivators are just that, and thus act very similarly to our emotions. You might very well think he has emotions, but he hasn’t, he has something else.

Everything makes sense if you think of it like this. Data behaves almost human, but not quite. The emotion chip actually has a huge effect. Lore is completely different. This all makes sense when you think of emotions as an actually new thing for Data, but something else still being there.

He didn’t lie; he didn’t answer the question.

Riker didn’t actually ask a question, he just made a statement.

We know he’s figured out bloodlust by Generations.

Anyone who thinks he didn’t fire with the intention to kill Fajo needs to go back to English class and learn how to read some basic literature. It’s like the end of the Sopranos. People’s wishes for happy endings and perfect Hollywood stories blind them to the work the writers went through to tell you (rather obviously, there isn’t much room for debate among people who know how to interpret stories) that yes, Data can kill an unarmed man in the right circumstances, or yes, Tony Soprano’s brains are splattered all over his family. It’s not a happier story but it’s a better one with actual meaning and has a more lasting impact.

I believe that Data has it in him to make that decision, I’m mostly calling out the ambiguity of the scene as it played out. And yeah, Tony met a gruesome but earned end.

It’s like the end of the Sopranos.

They ran out of film?

Let’s face it, Data was gonna cap him. The bad dude had killed someone just before.

Nope. Let’s break it down.

“Perhaps something occurred during transport.”

The opening word, “perhaps,” instills ambiguity in whatever hypothesis follows it.

The next clause, “something occurred,” is objectively true. The weapon was dematerialized, deactivated, and then rematerialized. That sequence of events is accurately describable as “something occurred,” regardless of whether Data deliberately activated the firing mechanism of the weapon, the weapon malfunctioned during transit, or something else happened to cause the gun to discharge.

“During transport” cannot be false, since we do not see any weapon discharge before or during Data’s dematerialization.

Taken all together, this sentence is true and therefore Data would be safe to say it without lying.

Truth is a funny thing, and carefully selecting words can allow someone to be deceptive or evasive by stating an absolute truth. And that’s not even factoring in the subjectivity of truth, or any faults in the memory - synthetic, organic, or otherwise - of the individual. Objectivity can be frustratingly difficult to pin down.

Data’s the science officer. He could probably build a phaser from scrap blindfolded. Him saying maybe “something” happened during transport is clearly a deflection. I bet he thought about this moment when he discovered Lore and all Lore had done.

Why did Fajo believe Data couldn’t kill? We see Data blasting baddies all the time

We see Data blasting baddies all the time

Omg literally perfect