There’s an extraordinary amount of hype around “AI” right now, perhaps even greater than in past cycles, where we’ve seen an AI bubble about once per decade. This time, the focus is on generative systems, particularly LLMs and other tools designed to generate plausible outputs: responses that either make people feel they are correct, or that are good enough to fill in for domains where correctness doesn’t matter.

But we can tell the traditional tech industry (the handful of giant tech companies, along with startups backed by the handful of most powerful venture capital firms) is in the midst of building another “Web3”-style froth bubble because they’ve again abandoned one of the core values of actual technology-based advancement: reason.

The article makes several claims and insinuations without backing them up so I find it hard to follow any of the reasoning.

I don’t think it’s desirable that it’s easier to reason about an AI than about a human. If it is, then we haven’t achieved human-level intelligence. I posit that human intelligence can be reasoned about given enough understanding, but we’re not there yet, and until we are, we shouldn’t expect to be able to reason about AI either. If we could, that would just be a sign that the AI is not advanced enough to fulfill its purpose.

Postel’s law IMHO is a big mistake - it’s what gave us Internet Explorer and arbitrary unpredictable interpretation of HTML, leading to decades of browser incompatibility problems. But the law is not even applicable here. Unlike the Internet, we want the AI to appear to think for itself rather than being predictable.

“Today’s highly-hyped generative AI systems (most famously OpenAI) are designed to generate bullshit by design.” Uh, no? They’re designed with the goal of generating useful content. The bullshit is just an unfortunate side effect, because today’s AI algorithms have not evolved very far yet.

If I had to summarize this article in one word, that would be it: bullshit.

I agree that the author didn’t do a great job explaining, but they are right about a few things.

Primarily, LLMs are not truth machines. That’s just flatly and plainly not what they are. No researcher, not even OpenAI, makes such a claim.

The problem is the public perception that they are. Or that they almost are. Because a lot of the time, they’re right. They might even be right more frequently than some people’s dumber friends. And even when they’re wrong, they sound right; they still sound smarter than most people’s smartest friends.

So, I think that the point is that there is a perception gap between what LLMs are, and what people THINK that they are.

As long as the perception is more optimistic than the reality, a bubble of some kind will exist. But just because there is a “reckoning” somewhere in the future doesn’t mean it will crash to nothing. It just means the investment will align more closely with realistic expectations as it becomes clearer what realistic expectations even are.

LLMs are going to revolutionize and also destroy many industries. They will absolutely, fundamentally change the way we interact with technology. No doubt. But for applications that strictly demand correctness, they are not appropriate tools. And investors don’t really understand that yet.

OpenAI’s algorithm, like all LLMs, is designed to give you the next most likely word in a sentence based on what most frequently came next in its training data. Their main strategy has actually been to use an older and simpler transformer algorithm, and to just vastly increase the scraped text content and, recently, bias with each new release.

I would argue that any system that works by stringing pseudorandom words together based on how often they appear in its input sources is not going to be able to do anything but generate bullshit, albeit bullshit that may happen to be correct by pure accident when it’s nearly directly quoting said input sources.
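To make the frequency-based description above concrete, here is a toy bigram model in Python. It is a sketch under heavy simplifying assumptions, not how OpenAI’s models or any transformer actually work: a transformer computes a learned probability distribution over tokens conditioned on the whole preceding context, rather than counting which word followed the previous one. The function names and the tiny corpus are hypothetical. `most_likely_next` is the greedy “next most likely word” choice, and `sample_next` is the pseudorandom, frequency-weighted version.

```python
from __future__ import annotations

import random
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    follows: defaultdict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return dict(follows)


def most_likely_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Greedy choice: the word that most often came next (ties go to the first seen)."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def sample_next(model: dict[str, Counter], word: str, rng: random.Random) -> Optional[str]:
    """Pseudorandom choice, weighted by how often each word came next."""
    counts = model.get(word)
    if not counts:
        return None
    candidates = list(counts)
    return rng.choices(candidates, weights=[counts[w] for w in candidates], k=1)[0]


def generate(model: dict[str, Counter], start: str, length: int,
             rng: Optional[random.Random] = None) -> str:
    """String words together greedily, or by sampling if an RNG is given."""
    words = [start]
    for _ in range(length):
        if rng is None:
            nxt = most_likely_next(model, words[-1])
        else:
            nxt = sample_next(model, words[-1], rng)
        if nxt is None:  # this word never had a successor in the training text
            break
        words.append(nxt)
    return " ".join(words)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(generate(model, "the", 5))                    # the cat sat on the cat
print(generate(model, "the", 5, random.Random(0)))  # varies with the seed
```

Even this toy shows the point being argued: the output is locally fluent (“the cat sat on the cat”) without any notion of whether it is true.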

The article makes several claims and insinuations without backing them up so I find it hard to follow any of the reasoning.

“Article”. I’m going to call it what it is: a blog post that should have been moderated away. If people here are going to post “tech news”, make sure it has actual journalism.

Postel’s law IMHO is a big mistake - it’s what gave us Internet Explorer and arbitrary unpredictable interpretation of HTML, leading to decades of browser incompatibility problems. But the law is not even applicable here. Unlike the Internet, we want the AI to appear to think for itself rather than being predictable.

It’s almost like Isaac Asimov wrote a famous book about robotic laws and a bunch of different short stories on how easy it was to circumvent them.

Today’s AI is way worse than when ChatGPT was first released… it is way too censored.

But either way, I never considered LLMs to be A.I. even if they have the possibility to be great.

Today’s AI is way worst then when ChatGPT was first released… it is way to censored.

You might need to reconsider that position. There are plenty of uncensored models you can run on your local machine that match or beat GPT-3 and beat the everliving shit out of GPT-2 and other older models. Just running them locally would have been unthinkable when GPT-3 was released, let alone on a CPU at reasonable speed. The fact that open-source models do so well on such meager resources is pretty astounding.

I agree that it’s not AGI though. There might be some “sparks” of AGI in there (as some researchers probably put it), but I don’t think there’s much evidence of self-awareness yet.

Which one is your favorite? I might buy some hardware soon to be able to run them (I only have a laptop right now, and it’s not the greatest, but I am willing to upgrade).

You might not even need to upgrade. I personally use GPT4All and like it for the simplicity. What is your laptop spec like? There are models that can run on a Raspberry Pi (slowly, of course 😅) so you should be able to find something that’ll work with what you’ve got.

I hate to link the orange site, but this tutorial is comprehensive and educational: https://www.reddit.com/r/LocalLLaMA/comments/16y95hk/a_starter_guide_for_playing_with_your_own_local_ai/

The author recommends KoboldCPP for older machines: https://github.com/LostRuins/koboldcpp/wiki#quick-start

I haven’t used that myself because I can run OpenOrca and Mistral 7B models pretty comfortably on my GPU, but it seems like a fine place to start! Nothing stopping you from downloading other models as well, to compare performance. TheBloke on Huggingface is a great resource for finding new models. The Reddit guide will help you figure out which models are most likely to work on your hardware, but if you’re not sure of something just ask 😊 Can’t guarantee a quick response though, took me five years to respond to a YouTube comment once…
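For a rough sense of which models will fit on given hardware, a common back-of-the-envelope estimate is: weight memory ≈ parameter count × bits per weight ÷ 8 bytes. The Python sketch below assumes that formula plus a flat `overhead_gb` allowance for the context cache and runtime; the 1 GB default is an arbitrary, hypothetical figure, not a measured one. Quantized formats usually spend slightly more than the nominal bits per weight on scaling factors, so treat the weights figure as a lower bound.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone: parameters x bits / 8 bytes (1 GB = 1e9 bytes)."""
    return params_billion * 1e9 * bits_per_weight / 8 / 1e9


def fits(params_billion: float, bits_per_weight: float, available_gb: float,
         overhead_gb: float = 1.0) -> bool:
    """Assumes a flat, hypothetical overhead for the context cache and runtime."""
    return weight_memory_gb(params_billion, bits_per_weight) + overhead_gb <= available_gb


for params, bits in [(3, 4), (7, 4), (7, 16)]:
    size = weight_memory_gb(params, bits)
    print(f"{params}B @ {bits}-bit: ~{size:.1f} GB of weights, fits in 4 GB: {fits(params, bits, 4)}")
```

Under these assumptions, a 3B model quantized to 4 bits needs about 1.5 GB for its weights and fits in 4 GB of RAM, while a 7B model needs about 3.5 GB at 4 bits and about 14 GB at 16 bits, which is why quantized 7B models are the usual choice for a single consumer GPU.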

thanks a lot man, I will look into it, but I have an on-board GPU… not a big deal if I need to upgrade (I spend more on hookers and blow weekly)

It’s ok if you don’t have a discrete GPU, as long as you have at least 4GB of RAM you should be able to run some models.

I can’t comment on your other activities, but I guess you could maybe find some efficiencies if you buy the blow in bulk to get wholesale discounts and then pay the hookers in blow. Let’s spreadsheet that later.

AGI

It depresses me that we have to find new silly acronyms to mean something we already had acronyms for in the first place, just because we are simply too stupid to use our vocabulary appropriately.

AI is what “AGI” means. Just fucking AI. It has been for more than half a century, it is sensical, and it is logical.

However, in spite of its name, the current technology is not really capable of generating information, so it isn’t capable of actual “intelligence”. It is pseudo-generation, which it achieves by sequencing and combining input (AKA training) data. So it does not generate new information, but rather new variations of existing information. Due to this fact, I would prefer the name of “Artificial Adaptability” (or “AA”, or “A2”) to be used in lieu of “AI”, or “Artificial Intelligence” (on the grounds that it means something else entirely).

Edit: to the people it may concern: stop answering this about “Artifishual GeNeRaL intelligence”. I know what AGI means. It takes all of 3 seconds to do an internet search, and it isn’t even necessary: everyone has known for months. I did not bother to spell it out, because I did not imagine that anyone would be simple enough to take literally the first word starting with “g” from my comment and roll with that in a self-important diatribe on what they imagined I was wrong about. So if you feel the need to project what you imagine I meant, and then correct that, please don’t. I’m sad enough already that humanity is failing; I do not need more evidence.

Edit 2: “your opinion only matters if you have published papers”. No. Also, it is a really stupid argument from authority. Besides, anyone with enough time on their hands can get papers published. It is not a guarantee of quality, but merely proof that you LARPed in academia. The hard part isn’t writing, it is thinking. And as I wrote before, I already know this; I need no more proof, thank you.

The fact that you think “AGI” is a new term, or the fact that you think the “G” stands for “Generative” shows how much you know about the field, so maybe you should go read up on literally any of it before you come at me with this attitude and your “due to this fact” pseudo-intellectual bullshit.

The “G” stands for “General”, friend. It distinguishes an Artificial Intelligence that is narrow in the scope of its knowledge from intelligences like us that can adapt to new tasks and improve themselves. We do not have Artificial General Intelligence yet, but the ones we have are getting there, and faster than you could possibly imagine.

Tell me, oh Doctor Of Neuropsychology and Computer Science: how do people learn? How do people generate new information?

Actually no fuck that, I have a better question: define “intelligence”. Let’s hear it, I’ve wanted to act the Picard in a Data trial since I was a kid. Since around the time that the term Artificial General Intelligence was coined in fact: nineteen ninety fucking seven.

the fact that you think the “G” stands for “Generative”

You’ve shown your IQ right there. No time to waste with you. Goodbye.

If you haven’t published a few papers then your preference in acronyms is irrelevant.

AI comprises everything from pattern recognition, like OCR and speech recognition, to the complex transformers we know now. All of these are specialized in that they can only accomplish a single task, such as recognizing graffiti or generating graffiti. AGI, artificial general intelligence, would be flexible enough to do all the things and is currently considered the holy grail of AI.

But either way, I never considered LLMs to be A.I. even if they have the possibility to be great.

It doesn’t matter what you consider, they are absolutely a form of AI. In both definition and practice.

tell me how… they are dumb as fuck and follow a stupid algo… the data make them somewhat smart, that’s it. They don’t learn anything by themselves… I could do that with a few queries and a database.

You could do that with a few queries and a database? Lol. How do you think LLMs work? It seems you don’t know very much about them.

they are dumb as fuck

This isn’t an argument in the way you think it is. Something being “dumb” doesn’t exclude it from possessing intelligence. By most metrics toddlers are “dumb”, but no one would seriously suggest that any person lacks intelligence in the literal sense. And having low intelligence is not the same as lacking it.

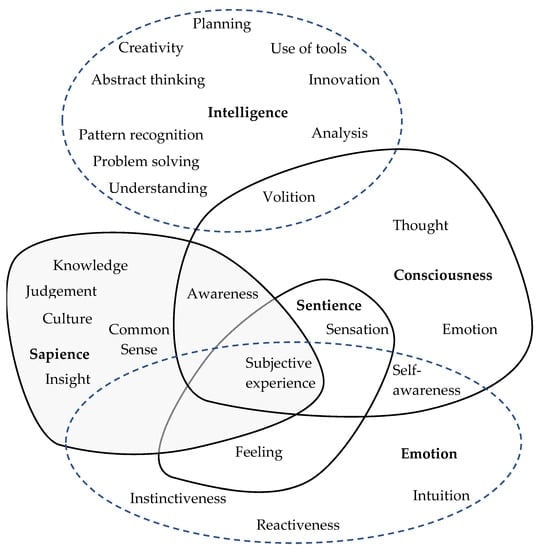

Can you even define intelligence? I would honestly hazard a guess that by “intelligence” you really mean sapience. The discussion of what is intelligence, sapience, or sentience is far more complicated than you’d expect.

follow a stupid algo

Our brains literally run on an algorithm.

the data make them somewhat smart, that’s it

And where’s the intelligence in people without the data we learn?

They don’t learn anything by themselves

I don’t know what you even mean by this. Everything learns with external input.

I could do that with a few queries and a database.

The hell you could! This statement demonstrates you have absolutely no clue what you’re talking about. LLMs learn and process information in a way extremely close to how biological neurons function. We’re just using digital computation instead of analogue (the way all biology works).

LLMs have regularly demonstrated genuine creativity and even some emergent properties. They are able to learn certain “concepts” (I put concepts in quotes, because that’s not the right word here) that we as humans intrinsically know. Things like “a knight in armour” are likely to refer to a man, because historically it was entirely men that became knights, outside of a few recorded instances.

It can also learn general distances between cities and other locations from the text itself: for example, that New York City and Houston are closer to each other than either is to Paris.

No, you 100% absolutely in no way ever could do the same thing with a database and a few queries.

*too

sorry for all my spelling mistakes… I fixed a few. I think everyone should spee(a)k binary anyways.

I’m sorry I’m a spelling spaz.

Anything we’ve had before now wasn’t AI. It was advanced switch statements. This is the first time to my knowledge that we have a working mesh based AI available to the public. This is not a hype bubble, it is revolutionary and will impact 80% of all jobs on the planet over the next 10 years.

Anything we’ve had before now wasn’t AI.

This claim doesn’t work simply due to the fact AI is a very vague term which nobody agrees on. The broadest and most literal (and possibly oldest) definition is simply any inorganic emulation of intelligence. This includes if statements and even purely mechanical devices. The narrowest definition is a computer with human-like intelligence, which is why some people claim LLMs are not AI.

Saying LLMs work differently from older AI approaches is fair; saying older approaches are not AI but the latest one is, is questionable.

Yeah, I have to disagree. Reason-able-ness is extremely important. It allows us to compose various pieces of logic, which is why I do think it will always be more important than the non-reason-able, 95%-accurate solutions.

But that fundamental flaw does not mean the non-reason-able parts can’t exist at all. They simply have to exist at the boundaries of your logic.

They can be used to gather input, which will then be passed into your reason-able logic. If the 95% accurate solution fucks up, that means the whole system is 95% accurate, but otherwise, it doesn’t affect your ability to reason about the rest.

Well, and they can be used to format your output. Whether that’s human-readable text or an image or something else. Again, if they’re 95% accurate, your whole system is 95% accurate, but you can still reason about the reason-able parts.

It’s not that different from traditional input & output, especially if a human is involved in those.
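The boundary pattern described above can be sketched in Python. Everything here is hypothetical: `toy_parse` stands in for an LLM that turns free text into structured input, `toy_render` stands in for one that formats the output, and the price table is made up. The deterministic core (`validate`, `total_price`) is the part you can reason about and test. `end_to_end_accuracy` adds one step the comment leaves implicit: assuming the boundary errors are independent and the core is correct, the boundary accuracies multiply, so a 95%-accurate parser feeding a 95%-accurate formatter gives roughly 0.95 × 0.95 ≈ 90% end to end.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical price table used by the deterministic core.
PRICES = {"apple": 0.50, "pear": 0.75}


@dataclass(frozen=True)
class Order:
    item: str
    quantity: int


def toy_parse(text: str) -> Optional[Order]:
    """Stand-in for a fuzzy, LLM-style input boundary: expects '<quantity> <item>'."""
    parts = text.strip().lower().split()
    if len(parts) != 2 or not parts[0].isdigit():
        return None
    return Order(item=parts[1], quantity=int(parts[0]))


def toy_render(price: float) -> str:
    """Stand-in for a fuzzy, LLM-style output boundary."""
    return f"That comes to {price:.2f}."


def validate(order: Optional[Order]) -> Optional[Order]:
    """Reject anything from the input boundary that the core cannot handle."""
    if order is None or order.item not in PRICES or order.quantity <= 0:
        return None
    return order


def total_price(order: Order) -> float:
    """Deterministic core: easy to reason about and to test exhaustively."""
    return PRICES[order.item] * order.quantity


def handle(
    text: str,
    parse: Callable[[str], Optional[Order]] = toy_parse,
    render: Callable[[float], str] = toy_render,
) -> str:
    order = validate(parse(text))  # fuzzy input, checked before the core sees it
    if order is None:
        return "Sorry, I couldn't understand that order."
    return render(total_price(order))  # fuzzy output


def end_to_end_accuracy(*boundary_accuracies: float) -> float:
    """Assumes independent boundary errors and a correct core: accuracies multiply."""
    result = 1.0
    for accuracy in boundary_accuracies:
        result *= accuracy
    return result


print(handle("3 apple"))      # That comes to 1.50.
print(handle("two bananas"))  # Sorry, I couldn't understand that order.
print(round(end_to_end_accuracy(0.95, 0.95), 4))  # 0.9025
```

Validation only catches outputs the core cannot handle. A plausible but wrong parse (say, 4 instead of 3) passes straight through, and that is exactly the kind of error the accuracy figure accounts for.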