See THIS POST

Notice the 2,000 upvotes?

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically about that post. Rather, we need to collectively organize and find a method to deal with this.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

The real problem is that the fediverse is so open that there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances for hosting bots, and then federating with other servers. (Spinning up a new instance can be fully automated, and takes UNDER 15 seconds.)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. NEWS POST 1 POST TWO

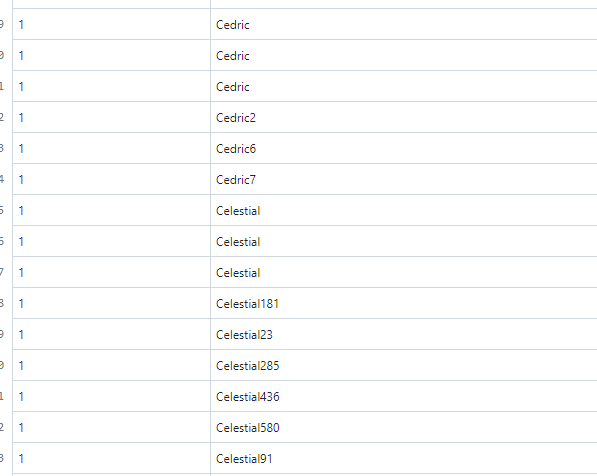

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance online (lemmyonline.com). I can assure you, I don’t have 30k users and 1.2 million comments.

- There are no built-in “real-time” methods for admins to identify suspicious activity from their users via the UI. I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.
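To give an idea of the kind of summary an admin might want in the UI, here is a minimal sketch in Python. It assumes you have already pulled the votes for one post out of the database into simple records. The `Vote` record, its fields, and the one-day "new account" window are all made up for illustration; none of this is Lemmy's actual schema or API.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Vote:
    # Hypothetical record for illustration; NOT Lemmy's real schema.
    voter: str                  # "name@instance", e.g. "alice@example.social"
    account_created: datetime   # when the voting account was created
    cast_at: datetime           # when the vote was cast


def summarize_votes(votes, new_account_window=timedelta(days=1)):
    """Return (votes per instance, share of votes cast by brand-new accounts)."""
    per_instance = Counter(v.voter.split("@", 1)[1] for v in votes)
    fresh = sum(
        1 for v in votes
        if v.cast_at - v.account_created <= new_account_window
    )
    share = fresh / len(votes) if votes else 0.0
    return per_instance, share


# Made-up sample data: one old account, three accounts created minutes ago.
now = datetime(2023, 6, 20, 12, 0)
votes = [
    Vote("alice@example.social", now - timedelta(days=400), now),
    Vote("bot1@bots.example", now - timedelta(minutes=5), now),
    Vote("bot2@bots.example", now - timedelta(minutes=4), now),
    Vote("bot3@bots.example", now - timedelta(minutes=3), now),
]
per_instance, fresh_share = summarize_votes(votes)
print(per_instance.most_common(1), fresh_share)
```

A post where most votes come from a single unfamiliar instance, or from accounts created minutes earlier, is not proof of botting, but it is the sort of thing worth a second look from a human.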

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate content like that, as was proven above-

What is going to happen when big-corpo wants to swing their fist around?

Edits

- Removed most of the images containing instances. Some of those issues have already been taken care of. Also, I don’t want to distract from the ACTUAL problem.

- Cleaned up post.

@xtremeownage

I think one of the most difficult things about dealing with the more common bots, spamming, reposting, etc. is that parsing all the commentary and handling it at a service-wide level is really hard to do, in terms of computing power and sheer volume of content. It seems to me that doing this at the instance level, with user numbers in the tens of thousands, is a heck of a lot more reasonable than doing it on a service with tens of millions of users.

What I’m getting at is that this really seems like something that could (maybe even should) be built into the instance moderation tools, at least some method of marking user activity as suspicious for further investigation by human admins/mods.
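As a rough sketch of what "marking user activity as suspicious" could look like at the instance level, here is a simple rate check in Python. The function name, the `(user, timestamp)` event format, and the thresholds (more than 10 actions in any one-minute window) are assumptions picked for illustration, not anything Lemmy ships today.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_suspicious(events, window=timedelta(minutes=1), max_actions=10):
    """Return users with more than max_actions actions inside any sliding window.

    events: iterable of (user, timestamp) pairs (hypothetical format).
    """
    by_user = defaultdict(list)
    for user, ts in events:
        by_user[user].append(ts)

    flagged = set()
    for user, stamps in by_user.items():
        stamps.sort()
        start = 0
        for end, ts in enumerate(stamps):
            # Shrink the window from the left until it spans at most `window`.
            while ts - stamps[start] > window:
                start += 1
            if end - start + 1 > max_actions:
                flagged.add(user)
                break
    return flagged


# Made-up sample: a human posting every 5 minutes, a bot acting every second.
base = datetime(2023, 6, 20, 12, 0)
events = [("human", base + timedelta(minutes=5 * i)) for i in range(5)]
events += [("bot", base + timedelta(seconds=i)) for i in range(30)]
print(flag_suspicious(events))
```

The output is only a list of accounts for a human admin or mod to review. A check this crude will have false positives, so it should feed an investigation queue rather than trigger automatic bans.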

We’re really operating on the assumption that people spinning up instances are acting in good faith until they prove that they aren’t. I think the first step is giving good-faith actors the tools to moderate effectively, then worrying about bad-faith admins.

I agree with this 110%

Yeah. A bad-faith admin can bypass pretty much whatever they want. They can even leave captchas and such turned on to give the impression that captchas aren’t working, because they can just create accounts through direct database access (which can instantly create as many accounts as you want).

Without some central or trusted way to ensure that accounts are created only while jumping through the right hoops, it’s utterly trivial to create bots.

Perhaps it’s even a sign that we shouldn’t bother trying to stop bots at sign up. We could instead have something where, when you post on a server, that server sends you a challenge (such as a captcha) and you must solve the challenge for the post to go through. We could require that the first time you post on each server. To keep it from being really annoying, servers could share that they have verified an account, and we’d only acknowledge that verification if it comes from a manually curated set of trusted servers.

In other words, rather than a captcha on sign up, you’d see a captcha when you first go to post/vote/etc on the first major server (or each server until you interact with a major one). If you signed up on a trusted server in the first place, you won’t have to do this.
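Here is a minimal Python sketch of that decision logic, just to make the idea concrete. Everything in it (the `Server` class, the `attestations` list, the server names) is hypothetical. In particular, a real version would need attestations to be cryptographically signed by the attesting server, which this sketch does not attempt.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    # Hypothetical model for illustration; not an existing Lemmy/ActivityPub API.
    name: str
    trusted_servers: set = field(default_factory=set)    # manually curated list
    verified_locally: set = field(default_factory=set)   # accounts that solved our challenge

    def needs_challenge(self, account, attestations):
        """Decide whether `account` must solve a challenge before posting here.

        attestations: names of servers that claim to have verified the account.
        NOTE: this sketch takes them at face value; real ones must be signed.
        """
        home = account.split("@", 1)[1]
        if account in self.verified_locally:
            return False              # already solved a challenge on this server
        if home in self.trusted_servers:
            return False              # signed up on a trusted server
        # Only attestations from trusted servers count.
        return not (set(attestations) & self.trusted_servers)

    def record_solved_challenge(self, account):
        self.verified_locally.add(account)


server = Server("big.example", trusted_servers={"trusted.example"})
print(server.needs_challenge("bob@new.example", []))                   # True
print(server.needs_challenge("bob@new.example", ["shady.example"]))    # True
print(server.needs_challenge("bob@new.example", ["trusted.example"]))  # False
print(server.needs_challenge("amy@trusted.example", []))               # False
```

Note that an attestation from an untrusted server is simply ignored, so a bad-faith admin vouching for their own bots gains nothing.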

Though honestly, that’s still pretty annoying, and it is a downside of federation. Another option is to semi-centralize the signup, with trusted servers doing the signup for untrusted ones.