The reporting errors were presumably a consequence of the results tapes not being programmed to a format that was compatible with state reporting requirements. Attempts to correct this issue appear to have created errors. The reporting errors did not consistently favor one party or candidate but were likely due to a lack of proper planning, a difficult election environment, and human error.

…

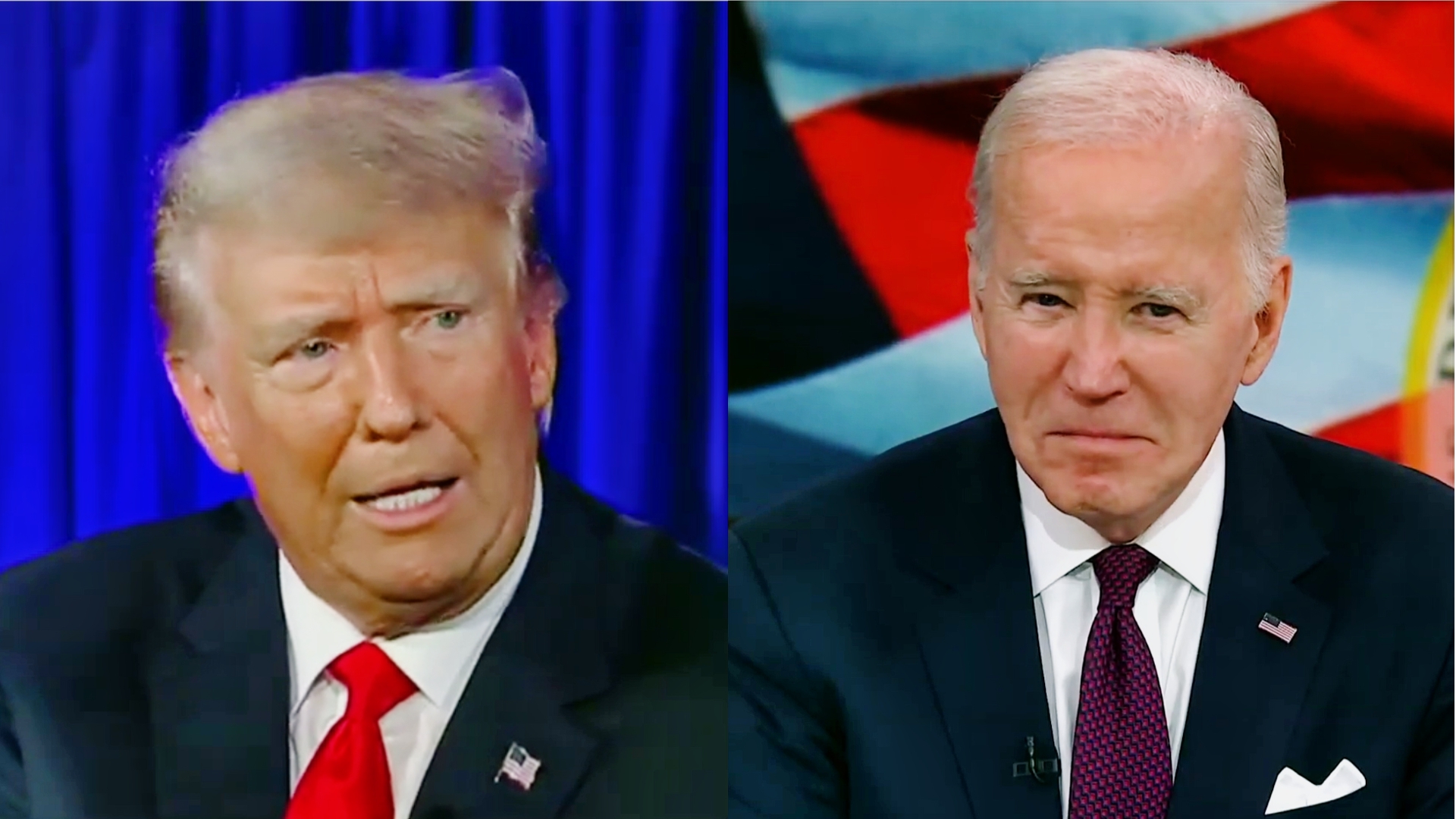

The discrepancies did not affect the outcome of any of the elections, but they did add to a growing list of defeats for Trump’s election lies.

It’s not that easy… There’s a bunch of proprietary systems, each adapted to dozens of specific regulations.

I’d say every 200 lines or so of code you make a mistake. Sometimes you hit the wrong key, sometimes you have a brain fart, sometimes there’s some complex interplay that leads to occasional issues.

A good programmer catches like 90% of them. Giving time for self-testing catches another few percent. A rock solid design with high automated test coverage might cut mistakes by a factor of 10. Add in great QA (a low-paid, low-status role that’s easily cut for profit) and you might get 90% of whatever is left.
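To put rough numbers on that, here’s a back-of-the-envelope sketch. The stage percentages are the ones from the comment above; the codebase size and reading “another few percent” as 3 points are assumptions made purely for illustration, and `residual_mistakes` is a made-up helper name.

```python
# Rough estimate of how many mistakes survive each stage described above.
# Assumptions (not from the thread): a 100,000-line codebase, and
# "another few percent" interpreted as 3 percentage points.

def residual_mistakes(lines_of_code: int, self_test_catch: float = 0.03) -> float:
    """Return the expected number of mistakes left after every stage."""
    made = lines_of_code / 200                        # one mistake per ~200 lines
    remaining = made * (1 - 0.90 - self_test_catch)   # programmer ~90%, self-testing a bit more
    remaining /= 10                                   # solid design + automated tests: factor of 10
    remaining *= 1 - 0.90                             # great QA catches ~90% of what is left
    return remaining


if __name__ == "__main__":
    print(f"{residual_mistakes(100_000):.2f} mistakes expected to ship")
```

Under those assumptions the estimate comes out at about 0.35 expected mistakes for 100,000 lines: small, but not zero, and that’s with every stage working as well as described.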

There’s no perfect code, and if you have to interface with another company jealously guarding their own implementation? They might give you a sample file and a short format definition. Is it correct? Can everyone read the spec and come away with the same understanding? Is there room for a bad actor to put their finger on the scales?

It’s just beyond human capability to write perfect code, even in the perfect environment. And the ideal solution is redundancy - ideally you’d have like 5 teams running very different software in parallel for each vote and count, and you’d debug any situation where they don’t all agree and recount.
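As a minimal sketch of that “run several independent implementations and debug any disagreement” idea: the team names, contest names, and the `find_disagreements` helper below are all hypothetical, and a real system would compare far more than final totals.

```python
from typing import Dict, Optional

Tally = Dict[str, int]  # candidate -> votes


def find_disagreements(
    results_by_team: Dict[str, Dict[str, Tally]]
) -> Dict[str, Dict[str, Optional[Tally]]]:
    """Return every contest where the independent teams do not all agree.

    results_by_team maps team name -> contest name -> tally.
    A contest that a team failed to report counts as a disagreement.
    """
    contests = set()
    for team_results in results_by_team.values():
        contests.update(team_results)

    flagged = {}
    for contest in sorted(contests):
        per_team = {
            team: team_results.get(contest)
            for team, team_results in results_by_team.items()
        }
        tallies = list(per_team.values())
        if any(t != tallies[0] for t in tallies):
            flagged[contest] = per_team  # needs debugging and a recount
    return flagged


if __name__ == "__main__":
    agreed = {"district-1": {"A": 510, "B": 490}, "district-2": {"A": 300, "B": 305}}
    results = {f"team-{i}": {k: dict(v) for k, v in agreed.items()} for i in range(1, 6)}
    results["team-4"]["district-2"]["B"] = 350  # one implementation disagrees
    for contest, per_team in find_disagreements(results).items():
        print(contest, per_team)
```

The point of the design is that nothing is decided automatically: the code only reports where the implementations differ, and people still have to work out which one is wrong.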

But that would be ungodly expensive and politically impossible… There’s room to do better, but there’s no money in it.

See, this is dumb. Not you, I mean. Program to format text documents and automate office paperwork, sure. I prefer TeX but YMMV. But how do you commercialise voting? Oh, “it’s America”. I guess.

There was a good Tom Scott that pointed out the problem of electronic voting is not just the reliability and trustworthiness of the systems, but the transparency for ordinary people to know it’s trustworthy.

You can’t achieve that perfectly with computers - not even with paper - but it’s important. Obviously to me, that would mean having the system open source, with closed (for extra security) extra measures for auditing. I understand them not doing that, but the government not wanting the voting software and systems to be fully open to government? That just blows the mind …though I kind of expected it.

As to redundancy and lack of bugs, there are some impressive programs out there by people who’ve got what it takes to make things very precise from formal definitions and so on (cf. some of the Functional Programming community, amongst others, and certain un-bloated, well-defined security things). Does the American government not have access to such people? Did they spend all their money on TV shows so they’ve none left for good programmers? And tallying election votes is not really the most elaborate of tasks, more one that needs formally defining very carefully. No ML professors left over from the dark ages who can write a formal spec as a PhD? How about that NSA lot who are supposed to be able to make spyware so clever it slips between the cracks in Apple phones? I suppose that’s more a field of trying lots of things and hoping some work, but still.

Sorry, rant over ;-)

The source being open doesn’t mean you can tamper with it. It means you can see it (in this case).

Did you mean “can’t”?

Open source lets the general public* audit it to know exactly what it’s doing. So much of the world revolves around layers of secrecy and convenience-trust. Elections should not be so!

*Or members thereof

I meant “can”. If the repo is read only you can’t change the code.

Okay. I think I missed your meaning a bit then.

Agreed for sure. Open source doesn’t (by its openness) mean people can tamper with it.

Hard agree… It’s sheer madness.

There’s two huge problems though. First, requirements for voting machines are created by people who don’t understand technology, which means we have a ton of arbitrary rules for how they work. They’re likely largely written by voting machine manufacturers trying to create barriers to entry.

Second… There’s programmers who write really good code, and there’s programmers who think flawless code is possible for humans. There’s no overlap.

If you encounter a programmer who thinks they can write flawless code, they’ve never released anything to the public.

The thing is, humans cannot handle the full problem space of anything more useful than a calculator. The being that can do that is to a human as we are to a cicada.

We envision how it will work, we test it with that bias, and we hand it to someone who sees the world very differently and they instantly break it.

I don’t know why I keep coming across this opinion on lemmy lately… But let’s use the example of key crypto libraries, I think it’s just about the best example you could give.

I agree, they’re pretty damn rock solid. They’re based on math, with predesigned inputs and outputs. We trust them so much that the first thing we tell a programmer who needs to encrypt something is “NEVER roll your own”. The entire world is based around them, we trust everything to them.

Pick a crypto library and look at their security patches. They’ve been broken mathematically, they’ve been broken due to typos, they’ve been broken due to hardware interactions or assumptions about the amount of computing power we think will be feasible in the next decade.

We know they’re defeatable.

The reason why we trust them so much isn’t because they’re flawless, it’s because they’re constantly under a spotlight. There’s a lot of money and reputation to be gained across several fields just devoted to this one issue.

We trust them, because we can’t do better.

Voting machine software is terrible, and we could do much better - simplification of requirements, parallel implementations from isolated teams looking for discrepancies, standardizing the requirements (worldwide even), review by unrelated subject matter experts… Hell, we should be using the machines for everything from grade school elections to stockholder votes, and posting huge bug bounties. They should be open source from top to bottom - the second rule of security is “security through obscurity is obfuscation, and obfuscation isn’t security”.

There’s a ton we could do to make it better, but most every bug is a wild edge case - the recent investigation results in Virginia suggest it works correctly more than 99.9991% of the time. Let’s call it 99.99% to account for anything undiscovered.

There’s no way a new project does better than that - this was human error due to confusion over the district borders… New software isn’t going to fix that.

The only way to get down to single-digit margins of error is to deploy it widely and frequently, add in more redundancy, and patch the crazy edge cases as they come up.

And most importantly, we need to simplify the problem and look at the human elements.

Redundancy and mitigation is all there is.

Fun fact, we discovered that cosmic rays flip bits at a predictable rate on every modern computer because of a voting machine showing impossible results… We can mitigate even that with redundancy and error checks, but the only way to approach perfection is to reduce single points of failure and learn by proactively looking for the holes to patch.
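For a feel of how redundancy handles a flipped bit, here’s a small sketch of one standard technique, triple modular redundancy: keep three copies of a count and take a bitwise majority. This isn’t how any particular voting machine works; the count and the helper names are invented for the example.

```python
def flip_bit(value: int, bit: int) -> int:
    """Simulate a single-bit upset by toggling one bit of a non-negative integer."""
    return value ^ (1 << bit)


def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority of three copies: each output bit is whatever at least two copies hold."""
    return (a & b) | (a & c) | (b & c)


if __name__ == "__main__":
    true_count = 514                          # hypothetical vote count
    corrupted = flip_bit(true_count, 12)      # one flipped bit adds 2**12 = 4096 votes
    copies = [true_count, corrupted, true_count]

    print("corrupted copy:", corrupted)
    print("recovered count:", majority_vote(*copies))
```

A single flipped bit in any one copy gets outvoted by the other two. It doesn’t help if two copies are hit in the same bit position, which is why it’s mitigation and not perfection.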