- cross-posted to:

- hackernews@derp.foo

Data poisoning: how artists are sabotaging AI to take revenge on image generators::As AI developers indiscriminately suck up online content to train their models, artists are seeking ways to fight back.

This system runs on the assumption that A) massive generalized scraping is still required, B) you maintain the metadata of the original image, and C) no transformation has occurred to the poisoned picture prior to training (Stable Diffusion is 512x512). Nowhere in the linked paper did they say they had conditioned the poisoned data to conform to the data set. This appears to be a case of fighting the last war.

It is likely a typo, but “last AI war” sounds ominous 😅

Takes image, applies antialiasing and resize

Oh, look at that, defeated by the completely normal process of preparing the image for training
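For anyone wondering what "antialiasing and resize" does to pixel-level noise, here is a toy sketch in plain Python. It is not Nightshade and not any real training pipeline: the `box_downsample` helper, the 4x4 image, and the checkerboard "perturbation" are all made up for illustration. It only shows that block averaging cancels a high-frequency pattern; it says nothing about whether real, optimized perturbations survive resizing.

```python
def box_downsample(pixels, factor):
    """Shrink a grayscale image (list of rows) by averaging factor x factor blocks."""
    height, width = len(pixels), len(pixels[0])
    out = []
    for top in range(0, height - height % factor, factor):
        row = []
        for left in range(0, width - width % factor, factor):
            block = [pixels[y][x]
                     for y in range(top, top + factor)
                     for x in range(left, left + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Hypothetical toy data: a flat grey 4x4 image, and the same image with a
# +/-2 checkerboard pattern standing in for an "invisible" perturbation.
clean = [[100] * 4 for _ in range(4)]
perturbed = [[100 + (2 if (x + y) % 2 == 0 else -2) for x in range(4)]
             for y in range(4)]

small_clean = box_downsample(clean, 2)
small_perturbed = box_downsample(perturbed, 2)

# After 2x2 averaging the checkerboard cancels and both images match.
print(small_perturbed == small_clean)
```

A perturbation spread over larger regions would not cancel this neatly, so treat this as an illustration of the comment's intuition, not as evidence about the actual tool.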

Unfortunately for them there’s a lot of jobs dedicated to cleaning data so I’m not sure if this would even be effective. Plus there’s an overwhelming amount of data that isn’t “poisoned” so it would just get drowned out if never caught

Imagine if writers did the same things by writing gibberish.

At some point, it becomes pretty easy to devalue that content and create other systems to filter it.

if writers did the same things by writing gibberish.

Aka, “X”

I mean isn’t that eventually going to happen? Isn’t ai going to eventually learn and get trained from ai datasets and small issues will start to propagate exponentially?

I just assume we have a clean dataset preai and messy gross dataset post ai… If it keeps learning from the latter dataset it will just get worse and worse, no?

Not really. It’s like with humans. Without the occasional reality checks it gets weird, but what people chose to upload is a reality check.

The pre-AI web was far from pristine, no matter how you define that. AI may improve matters by increasing the average quality.

Nightshade and Glaze never worked. It’s a scam lol

Shhhhh.

Let them keep doing the modern equivalent of “I do not consent for my MySpace profile to be used for anything” disclaimers.

It keeps them busy on meaningless crap that isn’t actually doing anything but makes them feel better.

Artists and writers should be entitled to compensation for using their works to train these models, just like any other commercial use would. But, you know, strict, brutal free-market capitalism for us, not the mega corps who are using it because “AI”.

Let’s see how long before someone figures out how to poison, so it returns NSFW Images

You can create NSFW ai images already though?

Or did you mean, when poisoned data is used a NSFW image is created instead of the expected image?

Definitely the last one!

companies would stumble all over themselves to figure out how to get it to stop doing that before going live. source: they already are. see bing image generator appending “ethnically ambiguous” to every prompt it receives

it would be a herculean if not impossible effort on the artists’ part only to watch the corpos scramble for max 2 weeks.

when will you people learn that you cannot fight AI by trying to poison it. there is nothing you can do that horny weebs haven’t already done.

It can only target open source, so it wouldn’t bother corpos at all. The people behind this object to not everything being owned and controlled. That’s the whole point.

The Nightshade poisoning attack claims that it can corrupt a Stable Diffusion model with fewer than 100 samples. Probably not to NSFW level. How easy it is to manufacture those 100 samples is not mentioned in the abstract.

yeah the operative word in that sentence is “claims”

I’d love nothing more than to be wrong, but after seeing how quickly Glaze got defeated (not only did it make the images nauseating for a human to look at despite claiming to be invisible, not even 48 hours after the official launch there was a neural network trained to reverse its effects automatically with like 95% accuracy), suffice to say my hopes aren’t high.

You seem to have more knowledge on this than me, I just read the article 🙂

This doesn’t actually work. It doesn’t even need ingestion to do anything special to avoid.

Let’s say you draw cartoon pictures of cats.

And your friend draws pointillist images of cats.

If you and your friend don’t coordinate, it’s possible you’ll bias your cat images to look like dogs in the data but your friend will bias their images to look like horses.

Now each of your biasing efforts become noise and not signal.

Then you need to consider if you are also biasing ‘cartoon’ and ‘pointillism’ attributes as well, and need to coordinate with the majority of other people making cartoon or pointillist images.

When you consider the number of different attributes that need to be biased for a given image and the compounding number of coordinations that would need to be made at scale to be effective, this is just a nonsense initiative that was an interesting research paper in lab conditions but is the equivalent of a mouse model or in vitro cancer cure being taken up by naturopaths as if it’s going to work in humans.
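The "noise and not signal" point can be sketched with a toy simulation. Everything here is an assumption for illustration: each artist's poisoning is modelled as a unit-length push in a 2D feature space, and `mean_shift_magnitude` is a made-up helper, not anything from the paper. The sketch compares the average pull when everyone pushes "cat" toward the same target versus when each artist picks a target independently.

```python
import math
import random


def mean_shift_magnitude(angles):
    """Length of the average of unit vectors pointing at the given angles."""
    n = len(angles)
    mean_x = sum(math.cos(a) for a in angles) / n
    mean_y = sum(math.sin(a) for a in angles) / n
    return math.hypot(mean_x, mean_y)


rng = random.Random(0)  # fixed seed so the run is repeatable
n_artists = 1000

# Coordinated: every artist pushes "cat" toward the same target direction.
coordinated = [0.0] * n_artists
# Uncoordinated: each artist picks a direction (dog, horse, ...) at random.
uncoordinated = [rng.uniform(0, 2 * math.pi) for _ in range(n_artists)]

coordinated_pull = mean_shift_magnitude(coordinated)
uncoordinated_pull = mean_shift_magnitude(uncoordinated)

print(f"coordinated pull:   {coordinated_pull:.3f}")
print(f"uncoordinated pull: {uncoordinated_pull:.3f}")
```

In this toy model the uncoordinated pull shrinks roughly like one over the square root of the number of artists, which is the cancellation the comment describes. A real model's feature space and training dynamics are far messier, so this only illustrates the argument.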

So it sounds like they are taking the image data and altering it to get this to work and the image still looks the same just the data is different. So, couldn’t the ai companies take screenshots of the image to get around this?

Not even that, they can run the training dataset through a bulk image processor to undo it, because the way these things work makes them trivial to reverse. Anybody at home could undo this with GIMP and a second or two.

In other words, this is snake oil.

The general term for this is adversarial input, and we’ve seen published reports about it since 2011, when it was considered a threat that CSAM could be overlaid with secondary images so that it wasn’t recognized by Google image filters or CSAM image trackers. If Apple had gone through with its plan to scan private iCloud accounts for CSAM, we might have seen this development.

So far (AFAIK) we’ve not seen adversarial overlays on CSAM, though in China the technique is used to deter tracking by facial recognition. Images on social media are overlaid by human rights activists / mischief-makers so that social media pics fail to match security footage.

The thing is, like an invisible watermark, these processes are easy to detect (and reverse) once users are aware they’re a thing. So if a generative AI project is aware that some images may be poisoned, it’s just a matter of adding a detection and removal process to the pathway from candidate image to training database.

Similarly, once enough people start poisoning their social media images, the data scrapers will start scanning and removing overlays even before the database sets are sold to law enforcement and commercial interests.

Feeding garbage to the garbage. How fitting.

It’s mind boggling how many people think the artists are the bad guys in this mess while their only arguments are that if you post something on the internet then expect it to be used. I even read a comment that said “art should just remain your hobby”. Firstly, without artists you wouldn’t have AI “art”. Secondly, I think you are forgetting that music is an art as well, how is it possible that AI music is done ethically and with regards to copyright? Why are you so angry about artists that draw trying to protect their work but not about artists that make music?

selftldr

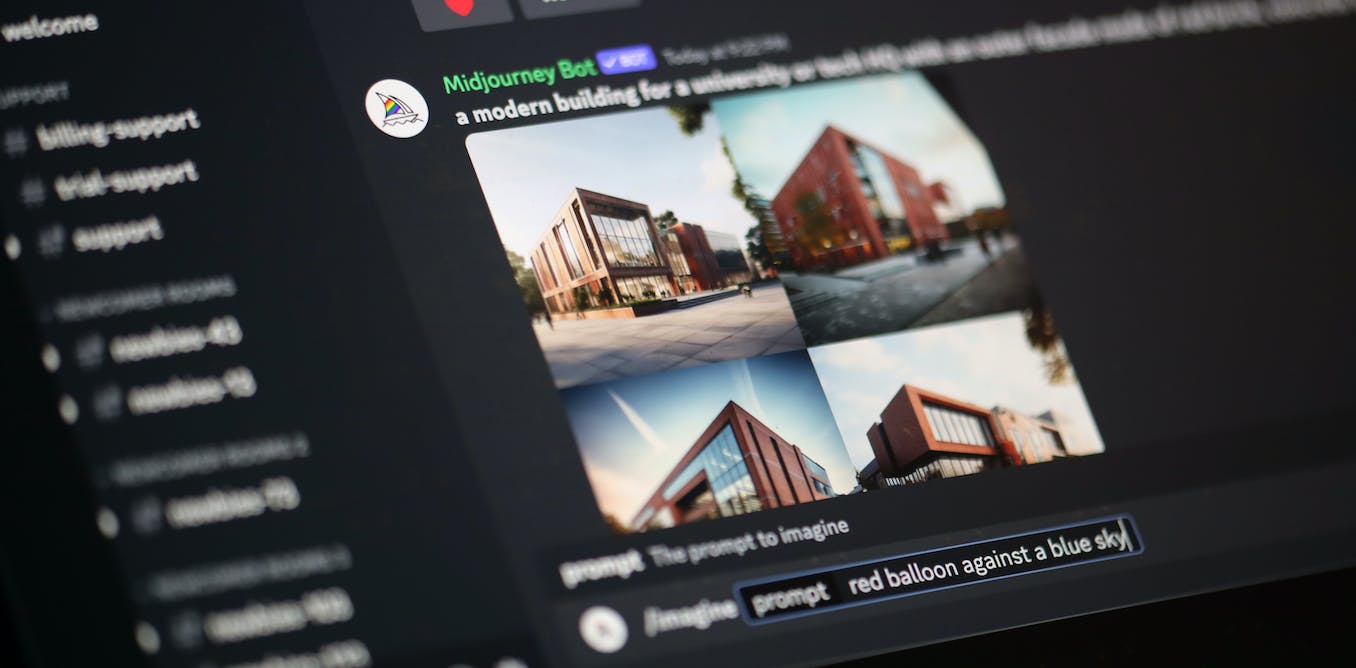

Researchers who want to empower individual artists have recently created a tool named “Nightshade” to fight back against unauthorised image scraping. The tool works by subtly altering an image’s pixels in a way that wreaks havoc on computer vision but leaves the image unaltered to a human’s eyes.

We need tools to detect poisoned datasets.

Fighting the uphill battle to irrelevance …

Man, whenever I start getting tired of the number of tankies on Lemmy, the Linux users and the decent AI takes from users rejuvenate me. The rest of the internet has jumped full throttle on the AI hate train.

The “AI hate train” is people who dislike being replaced by machines, forcing us further into the capitalist machine rather than enabling anyone to have a better life

No disagreement, but it’s like hating water because the capitalist machine used to run water mills. It’s a tool, what we hate is the system and players working to entrench themselves and it. Should we be concerned about the people affected? Yes, of course, we always should have been, even before it was the “creative class” and white collar workers at risk. We should have been concerned when it was blue collar workers being automated or replaced by workers in areas with repressive regimes. We should have been concerned when it was service workers being increasingly turned into replaceable cogs.

We should do something, but people are tilting at windmills instead of the systems that oppress people. We should be pushing for these things to be public goods (open source like Stability is aiming for, distributed and small models like Petals.dev and TinyML). We should be pushing for unions to prevent the further separation of workers from the fruits of their labor (look at the Writer’s Guild’s demands during their strike). We should be trying to only deal with worker and community cooperatives so that innovations benefit workers and the community instead of being used against them. And much more! It’s a lot, but it’s why I get mad about people wasting their time being mad that AI tools exist and raging against them instead of actually doing things to improve the root issues.

The “AI hate train” runs on fear and skips stops for reason, headed for a fictional destination.

Not saying that there aren’t people like that, but this ain’t it. This tool specifically targets open source. The intention is to ruin things that aren’t owned and controlled by someone. A big part of AI hate is hyper-capitalist like that, though they know better than saying it openly.

People hoping for a payout get more done than people just being worried or frustrated. So it’s hardly a surprise that they get most of the attention.

Thing is, it’s capitalism that’s our enemy, not the tech that is freeing us up from labour. It’s not the tech that’s the problem, it’s our society, and it fucking sucks that I’m just as poor as the rest of you, but because I finally have a tool that satisfactorily lets me access my creativity, I’m being villainized by the art community, even though the tech I am using is open source and no capitalist is profiting off of me.