Testing my new comfy workflow.

Konsi!

Needs more priest gear in white though.

i just started to learn ComfyUI.

it’s much more efficient than auto1111 that’s for sure…i have 8 it/s on auto1111 and around 12 on comfy. But i have to learn the tricks first^^ my workflow is super basic as of right now

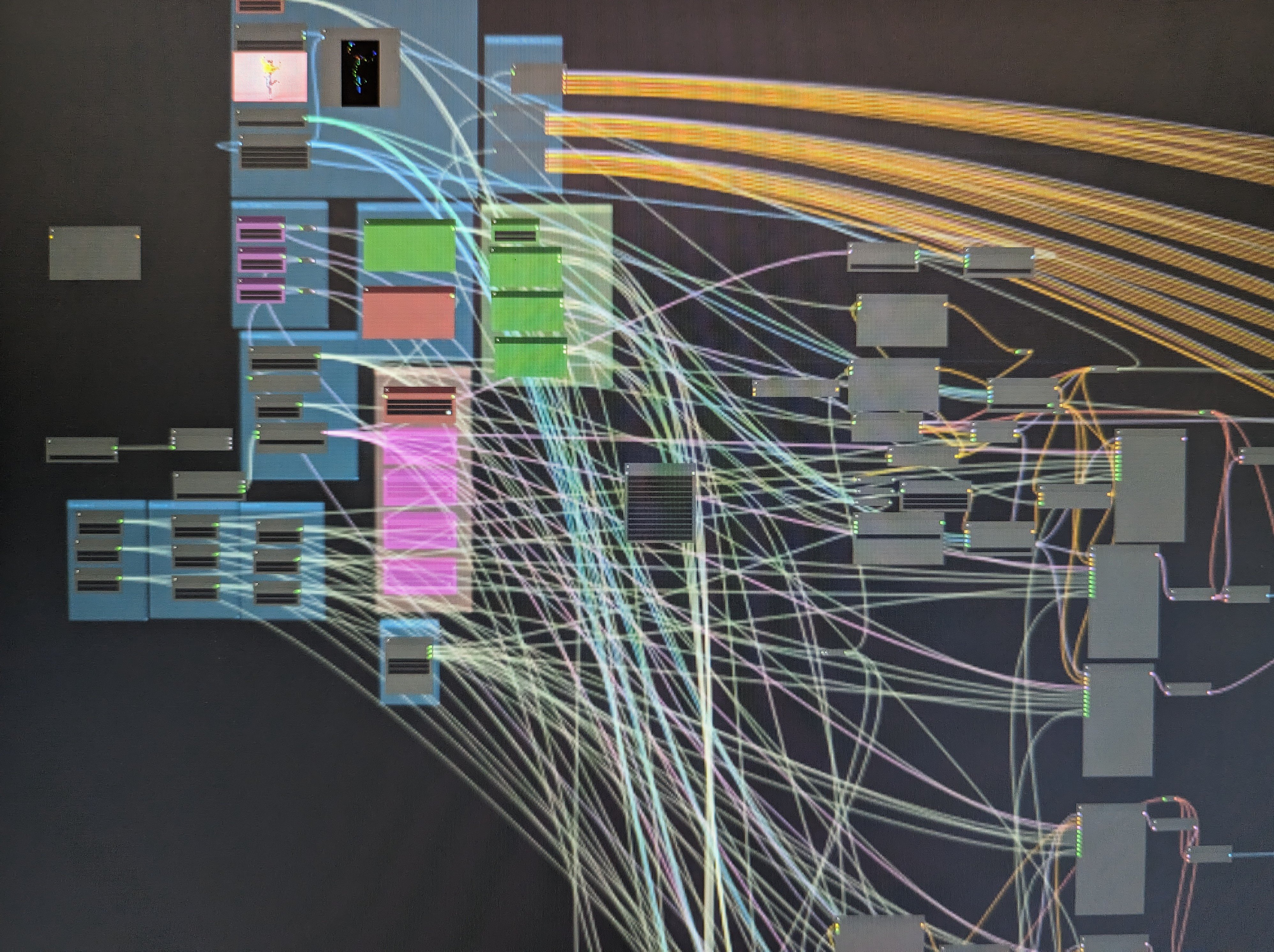

It can and will become complex ;-)

jeez…what the fuck is even happening there?!

A lot:

-

optional openpose controlnet

-

optional prompt cutoff

-

optional Lora stacker

-

optional face detailer

-

optional upscaler

-

optional negative embeddings (positive embeddings not yet implemented)

The prompt goes with the selected options into 3 models who generate 3 images. Every image variant per model can have its own parameters like sampler or steps.

Do you combine those three images after that or do you simply produce three seperate images with each run then? also afaik positive embeddings are implemented already.

I generate in total 9 images, 3 per model.

Which then go into the detailer and upscale pipeline (optional).

Embeddings: I use a embedding picker node which appends the embedding to the prompt as I am to lazy to look up the embedding filenames. I just pick them from a list. You can stack the node and add multiple embeddings this way without the embedding:[name] hassle.

so it wouldn’t look that complex if you only did one image, right? :-D

Do you know if any extension exist that allows me to have a “gallery” for Lora, Hypernetworks and embedding just like a1111 does? I really really like that i could have example images to show me what a specific lora or whatever will do with the style…i really miss that in comfyui

A) correct but I like to get multiple variants and then to proceed with the seed / model / sampler which gives the best results

B) you could create a gallery of your embeddings by your self with an x/y workflow from the efficiency nodes. I will look into it and send you an update in this thread

-