At Northwell Health, executives are encouraging clinicians and all 85,000 employees to use a tool called AI Hub, according to a presentation obtained by 404 Media.

Photo by Luis Melendez / Unsplash

Northwell Health, New York State’s largest healthcare provider, recently launched a large language model tool and is encouraging doctors and clinicians to use it for translation and for work involving sensitive patient data; it has also suggested the tool can be used for diagnostic purposes, 404 Media has learned. Northwell Health has more than 85,000 employees.

An internal presentation and employee chats obtained by 404 Media show how healthcare professionals are using LLMs and chatbots to edit writing, make hiring decisions, do administrative tasks, and handle patient data.

In the presentation given in August, Rebecca Kaul, senior vice president and chief of digital innovation and transformation at Northwell, along with a senior engineer, discussed the launch of the tool, called AI Hub, and gave a demonstration of how clinicians and researchers—or anyone with a Northwell email address—can use it.

AI Hub “uses [a] generative LLM, used much like any other internal/administrative platform: Microsoft 365, etc. for tasks like improving emails, check grammar and spelling, and summarizing briefs,” a spokesperson for Northwell told 404 Media. “It follows the same federal compliance standards and privacy protocols for the tools mentioned on our closed network. It wasn’t designed to make medical decisions and is not connected to our clinical databases.”

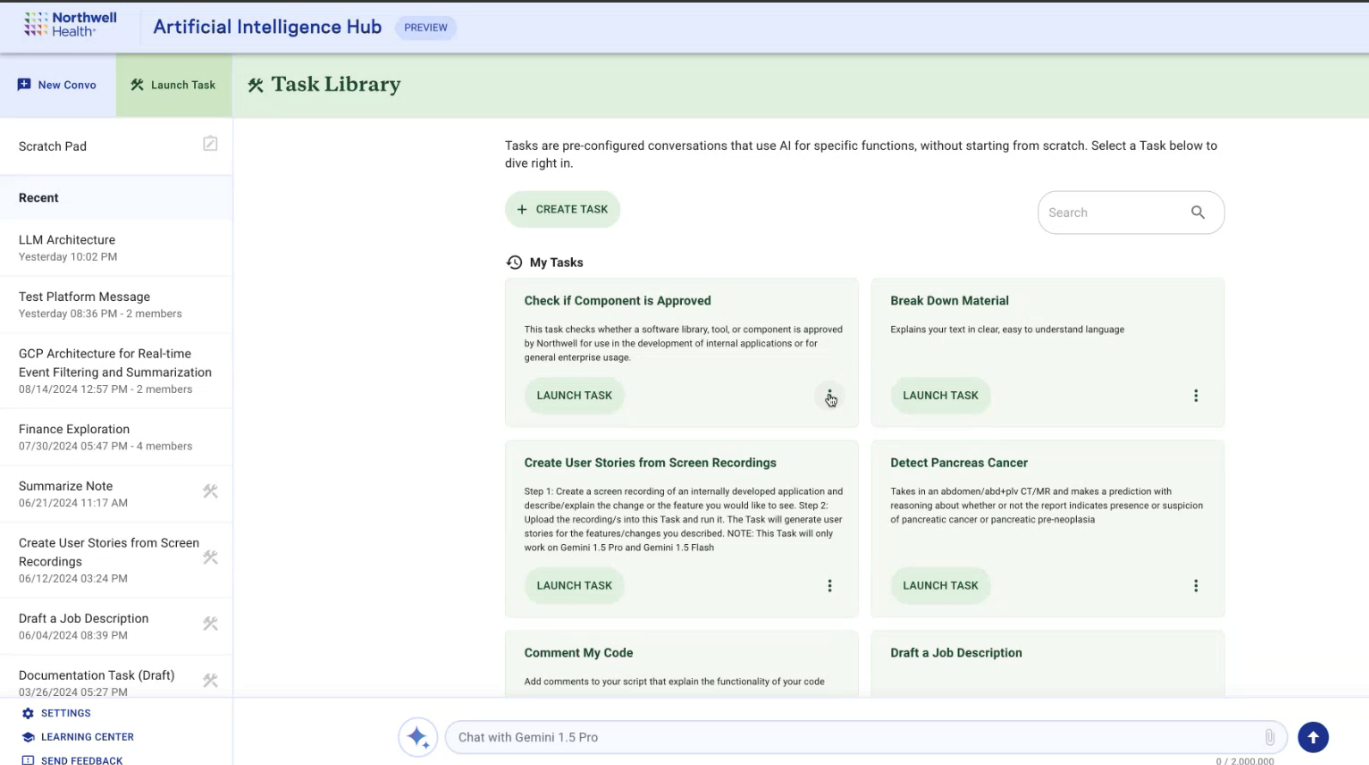

A screenshot from a presentation given to Northwell employees in August, showing examples of “tasks.”

But the presentation and materials viewed by 404 Media include leadership saying AI Hub can be used for “clinical or clinical adjacent” tasks, as well as answering questions about hospital policies and billing, writing job descriptions and editing writing, and summarizing electronic medical record excerpts and inputting patients’ personally identifying and protected health information. The demonstration also showed potential capabilities that included “detect pancreas cancer,” and “parse HL7,” a health data standard used to share electronic health records.

The leaked presentation shows that hospitals are increasingly using AI and LLMs to streamline administrative tasks, and that some are experimenting with, or at least considering, how LLMs could be used in clinical settings or in interactions with patients.

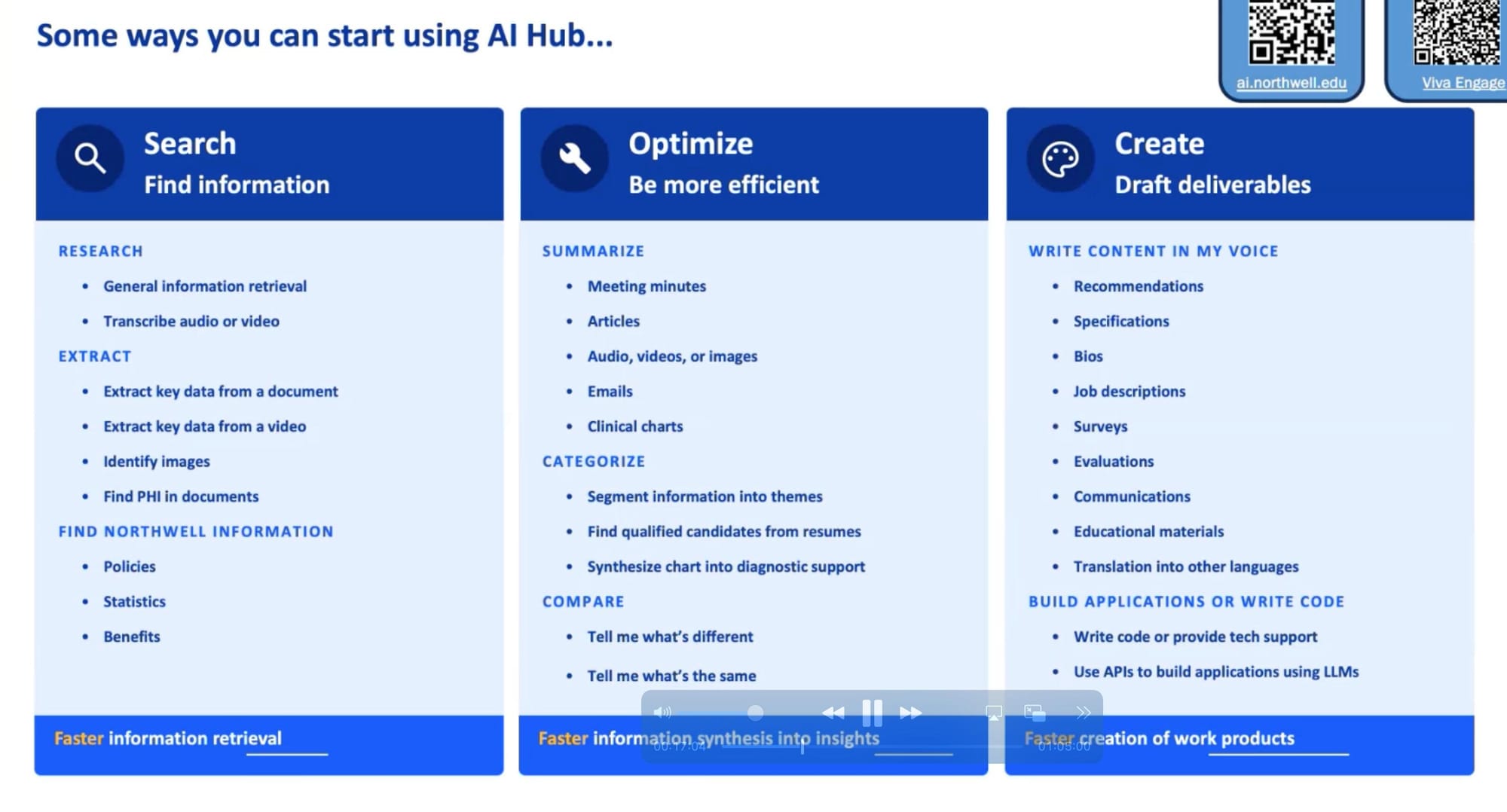

A screenshot from a presentation given to Northwell employees in August, showing the ways they can use AI Hub.

In Northwell’s internal employee forum, someone asked if they can use PHI, meaning protected health information that’s covered by HIPAA, in AI Hub. “For example we are wondering if we can leverage this tool to write denial appeal letters by copying a medical record excerpt and having AI summarize the record for the appeal,” they said. “We are seeing this as being developed with other organizations so just brainstorming this for now.”

A business strategy advisor at Northwell responded, “Yes, it is safe to input PHI and PII [Personally Identifiable Information] into the tool, as it will not go anywhere outside of Northwell’s walls. It’s why we developed it in the first place! Feel free to use it for summarizing EMR [Electronic Medical Record] excerpts as well as other information. As always, please be vigilant about any data you input anywhere outside of Northwell’s approved tools.”

AI Hub was released in early March 2024, the presenters said, and usage had since spread primarily through word of mouth within the company. By August, more than 3,000 Northwell employees were using AI Hub, they said, and leading up to the demo it was gaining as many as 500 to 1,000 new users a month.

During the presentation, obtained by 404 Media and given to more than 40 Northwell employees—including physicians, scientists, and engineers—Kaul and the engineer demonstrated how AI Hub works and explained why it was developed. Introducing the tool, Kaul said that Northwell saw examples where external chat systems were leaking confidential or corporate information, and that corporations were banning use of “the ChatGPTs of the world” by employees.

“And as we started to discuss this, we started to say, well, we can’t shut [the use of ChatGPT] down if we don’t give people something to use, because this is exciting technology, and we want to make the best of it,” Kaul said in the presentation. “From my perspective, it’s less about being shut down and replaced, but it’s more about, how can we harness the capabilities that we have?”

Throughout the presentation, the presenters suggested Northwell employees use AI Hub for things like answering questions about hospital policies, writing job descriptions, and editing writing. At one point, Kaul said, “People have been using this for clinical chart summaries.” She acknowledged that LLMs are often wrong. “That, as this community knows, is sort of the thing with gen AI. You can’t take it at face value out of the box for whatever it is,” Kaul said. “You always have to keep reading it and reviewing any of the outputs, and you have to keep iterating on it until you get the kind of output quality that you’re looking for if you want to use it for a very specific purpose. And so we’ll always keep reinforcing, take it as a draft, review it, and you are accountable for whatever you use.”

The tool looks similar to any text-based LLM interface: a text box at the bottom for user inputs, the chatbot’s answers in a window above that, and a sidebar along the left showing the user’s recent conversations. Users can choose to start a conversation or “launch a task.” The examples of tasks presenters gave in their August demo included administrative ones, like summarizing research materials, but also detecting cancer and “parse HL7,” which refers to Health Level 7, an international health data standard that allows hospitals to share patient health records and data with each other securely and interoperably.
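For readers unfamiliar with the standard, here is a rough idea of what “parsing HL7” involves for the widely used pipe-delimited HL7 version 2 format. This is a minimal sketch under stated assumptions, not the AI Hub task itself: the sample message and its patient are invented, the helpers `parse_hl7_v2` and `component` are hypothetical names, and only segment, field, and component splitting is handled. Repetitions, escape sequences, and newer HL7 formats such as FHIR are ignored.

```python
from typing import Dict, List

# Invented HL7 v2 message for illustration only; no real patient data.
# Segments end with "\r"; "|" separates fields and "^" separates components.
SAMPLE_MESSAGE = (
    "MSH|^~\\&|SENDING_APP|SENDING_FAC|RECEIVING_APP|RECEIVING_FAC|"
    "202408011200||ADT^A01|MSG00001|P|2.5\r"
    "PID|1||123456^^^HOSP^MR||DOE^JANE||19800101|F\r"
    "PV1|1|I|WARD1^101^A\r"
)


def parse_hl7_v2(message: str) -> Dict[str, List[List[str]]]:
    """Hypothetical helper: split an HL7 v2 message into fields per segment.

    Returns {segment_id: [fields_of_occurrence_1, ...]}. Repetitions (~),
    escape sequences and subcomponents (&) are not handled in this sketch.
    """
    if not message.startswith("MSH"):
        raise ValueError("HL7 v2 messages start with an MSH segment")
    field_sep = message[3]  # MSH-1 is the field separator character itself
    segments: Dict[str, List[List[str]]] = {}
    # Tolerate "\n" or "\r\n" line endings as well as the standard "\r".
    for raw in message.replace("\n", "\r").split("\r"):
        if not raw.strip():
            continue
        fields = raw.split(field_sep)
        segments.setdefault(fields[0], []).append(fields)
    return segments


def component(field: str, index: int, sep: str = "^") -> str:
    """Hypothetical helper: return the 1-based component of a field, or ""."""
    parts = field.split(sep)
    return parts[index - 1] if 0 < index <= len(parts) else ""


parsed = parse_hl7_v2(SAMPLE_MESSAGE)
pid = parsed["PID"][0]  # for non-MSH segments, pid[n] is field PID-n
print(component(pid[3], 1))  # PID-3, first component (patient ID) → 123456
print(component(pid[5], 1), component(pid[5], 2))  # PID-5 (name) → DOE JANE
```

Because the field separator itself occupies MSH-1, list indexes in the MSH segment run one behind the field numbers (index 8 is MSH-9, the message type); for every other segment, index n is field n.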

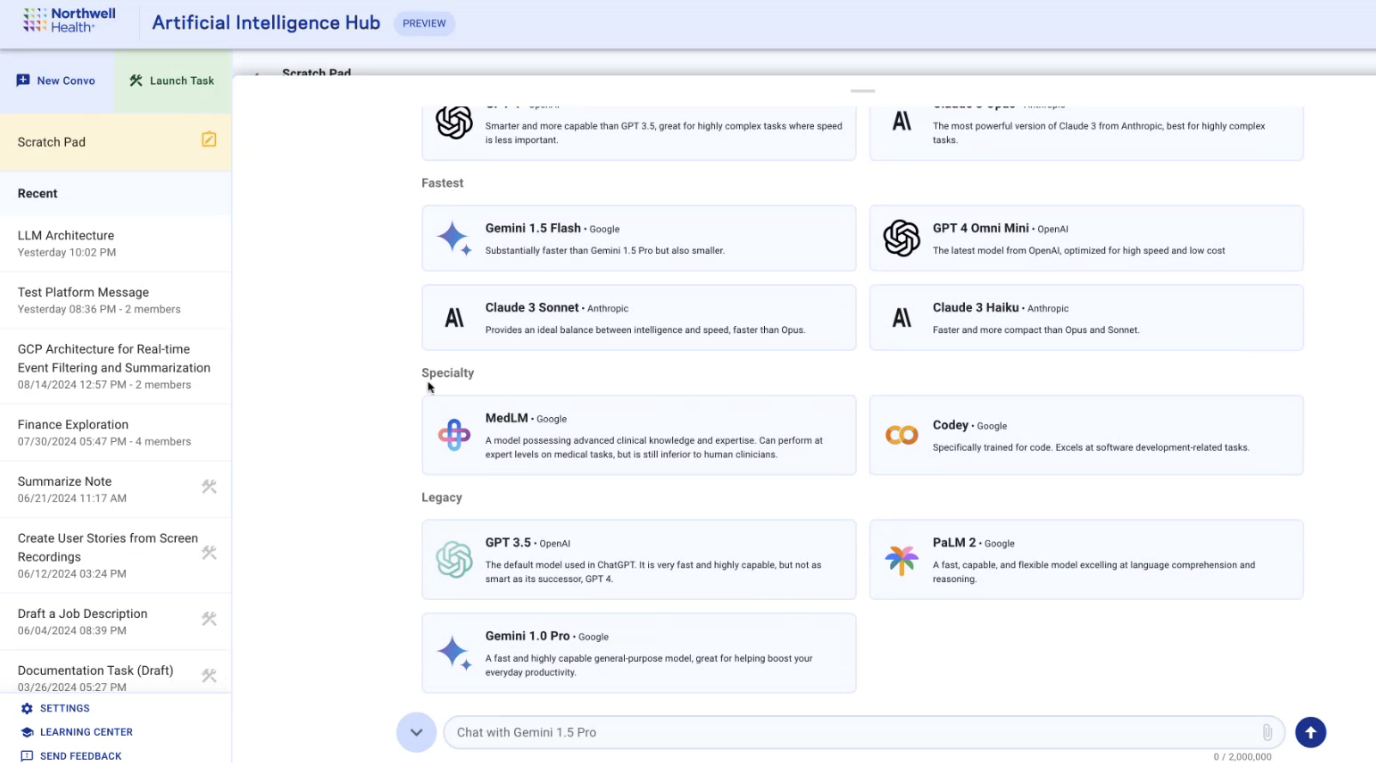

They can also choose from one of 14 different models to interact with, including Gemini 1.5 Pro, Gemini 1.5 Flash, Claude 3.5 Sonnet, GPT 4 Omni, GPT 4, GPT 4 Omni Mini, Codey, Claude 3 Opus, Claude 3 Sonnet, Claude 3 Haiku, GPT 3.5, PaLM 2, Gemini 1.0 Pro, and MedLM,

MedLM is a Google-developed LLM designed for healthcare. An information box for MedLM in AI Hub calls it “a model possessing advanced clinical knowledge and expertise. Can perform at expert levels on medical tasks, but is still inferior to human clinicians.”

A screenshot from a presentation given to Northwell employees in August, showing the different LLMs available to choose from.

Tasks are saved prompts. The examples the presenters gave in the demo include “Break down material” and “Comment My Code,” but also “Detect Pancreas Cancer,” with the description “Takes in an abdomen/adb+plv CT/MR and makes and prediction with reasoning about whether or not the report indicates presence or suspicious of pancreatic cancer or pancreatic pre-neoplasia.”

Tasks are private to individual users to start, but can be shared with the entire Northwell workforce. Users can submit a task for review by “the AI Hub team,” according to text in the demo. At the time of the demo, documents uploaded directly to a conversation or task expired after 72 hours. “However, once we make this a feature of tasks, where you can save a task with a document, make that permanent, that’ll be a permanently uploaded document, you’ll be able to come back to that task whenever, and the document will still be there for you to use,” the senior engineer said.

With Gemini 1.5 Pro and Flash, AI Hub also accepts uploads of photos, audio, video, and files like PDFs, a feature that has been “heavily requested” and is “getting a lot of use,” the presenters said. To demonstrate it, the engineer uploaded a 58-page PDF about how to remotely monitor patients and asked Gemini 1.5 Pro, “what are the billing aspects?” The model then summarized the billing aspects from the document.

Another use Northwell suggests for AI Hub is hiring. In the demo, the engineer uploaded two resumes and asked the model to compare them. Workplaces are increasingly using AI in hiring practices, despite warnings that it can worsen discrimination and systemic bias. Last year, the American Civil Liberties Union wrote that the use of AI poses “an enormous danger of exacerbating existing discrimination in the workplace based on race, sex, disability, and other protected characteristics, despite marketing claims that they are objective and less discriminatory.”

At one point in the demo, a radiologist asked, “Is there any sort of medical or ethical oversight on the publication of tasks?” They described a scenario in which someone chooses a task thinking it does one thing, not realizing it is meant to do another, and receives inaccurate results from the model. “I saw one that was, ‘detect pancreas cancer in a radiology report.’ I realize this might be for play right now, but at some point people are going to start to trust this to do medical decision making.”

The engineer replied that this is why tasks require a review period before being published to the rest of the network. “That review process is still being developed… Especially for any tasks that are going to be clinical or clinical adjacent, we’re going to have clinical input on making sure that those are good to go and that, you know, [they are] as unobjectionable as possible before we roll those out to be available to everybody. We definitely understand that we don’t want to just allow people to kind of publish anything and everything to the broader community.”

According to a report by National Nurses United, which surveyed 2,300 registered nurses and members of NNU from January to March 2024, 40 percent of respondents said their employer “has introduced new devices, gadgets, and changes to the electronic health records (EHR) in the past year.” As in almost every industry around the world, there’s a race to adopt AI happening in hospitals, with investors and shareholders promising a healthcare revolution if only networks adopt AI. “We are at an inflection point in AI where we can see its potential to transform health on a planetary scale,” Karen DeSalvo, Google Health’s chief health officer, said at an event earlier this year for the launch of MedLM’s chest x-ray capabilities and other updates. “It seems clear that in the future, AI won’t replace doctors, but doctors who use AI will replace those who don’t.” Some studies show promising results in detecting cancer using AI models, including when used to supplement radiologists’ evaluations of mammograms in breast cancer screenings, and in early detection of pancreatic cancer.


But patients aren’t buying it yet. A 2023 report by Pew Research found that 54 percent of men and 66 percent of women said they would be uncomfortable with the use of AI “in their own health care to do things like diagnose disease and recommend treatments.”

A Northwell employee I spoke to about AI Hub told me that as a patient, they would want to know if their doctors were using AI to inform their care. “Given that the chats are monitored, if a clinician uploads a chart and gets a summary, the team monitoring the chat could presumably read that summary, even if they can’t read the chart,” they said. (Northwell did not respond to a question about who is able to see what information in tasks.)

“This is new. We’re still trying to build trust,” Vardit Ravitsky, professor of bioethics at the University of Montreal, senior lecturer at Harvard Medical School and president of the Hastings Center, told me in a call. “It’s all experimental. For those reasons, it’s very possible the patients should know more rather than less. And again, it’s a matter of building trust in these systems, and being respectful of patient autonomy and patients’ right to know.”

Healthcare worker burnout—ostensibly the reason behind automating tasks like hiring, research, writing and patient intake, as laid out by the AI Hub team in their August demo—is a real and pressing issue. According to industry estimates, burnout could cost healthcare systems at least $4.6 billion annually. And while reports of burnout were down overall in 2023 compared to previous years (during which a global pandemic happened and burnout was at an all-time high), more than 48 percent of physicians “reported experiencing at least one symptom of burnout,” according to the American Medical Association (AMA).

“A source of that stress? More than one-quarter of respondents said they did not have enough physicians and support staff. There was an ongoing need for more nurses, medical assistants or documentation assistance to reduce physician workload,” an AMA report, based on a national survey of 12,400 responses from physicians across 31 states at 81 health systems and organizations, said. “In addition, 12.7% of respondents said that too many administrative tasks were to blame for job stress. The lack of support staff, time and payment for administrative work also increases physicians’ job stress.”

There could be some promise in AI for addressing administrative burdens on clinicians. A recent (albeit small and short) study found that using LLMs to do tasks like drafting emails could help with burnout. Studies show that physicians spend between 34 and 55 percent of their workdays “creating notes and reviewing medical records in the electronic health record (EHR), which is time diverted from direct patient interactions,” and that administrative work includes things like billing documentation and regulatory compliance.

“The need is so urgent,” Ravitsky said. “Clinician burnout because of note taking and updating records is a real phenomenon, and the hope is that time saved from that will be spent on the actual clinical encounter, looking at the patient’s eyes rather than at a screen, interacting with them, getting more contextual information from them, and they would actually improve clinical care.” But this is a double-edged sword: “Everybody fears that it will release some time for clinicians, and then, instead of improving care, they’ll be expected to do more things, and that won’t really help,” she said.

There’s also the matter of cybersecurity risks associated with putting patient data into a network, even if it’s a closed system.


Blake Murdoch, Senior Research Associate at the Health Law Institute in Alberta, told me in an email that if it’s an internal tool that’s not sending data outside the network, it’s not necessarily different from other types of intranet software. “The manner in which it is used, however, would be important,” he said.

“Generally we have the principle of least privilege for PHI in particular, whereby there needs to be an operational need to justify accessing a patient’s file. Unnecessary layers of monitoring need to be minimized,” Murdoch said. “Privacy law can be broadly worded so the monitoring you mention may not automatically constitute a breach of the law, but it could arguably breach the underlying principles and be challenged. Also, some of this could be resolved by automated de-identification of patient information used in LLMs, such as stripping names and assigning numbers, etc. such that those monitoring cannot trace actions in the LLM back to identifiable patients.”
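The “stripping names and assigning numbers” approach Murdoch describes is a form of pseudonymization. A minimal sketch, assuming a patient’s identifiers (name variants, record number) are already known from a structured record, might look like the following. The `Pseudonymizer` class and its methods are hypothetical, and the table linking tokens back to records would itself need tight access control. Real de-identification is considerably harder: HIPAA’s Safe Harbor method lists 18 kinds of identifiers, including dates, and a name that appears in free text but not in the structured record would slip through this approach.

```python
import itertools
import re
from typing import Dict, Iterable


class Pseudonymizer:
    """Hypothetical sketch: swap a patient's known identifiers for a token.

    Every identifier belonging to one record maps to the same numbered
    token (e.g. "PATIENT-0001"). The record-to-token table stays inside
    this object, so only tokens reach the LLM or any monitoring logs.
    """

    def __init__(self) -> None:
        self._tokens: Dict[str, str] = {}  # record ID -> token
        self._counter = itertools.count(1)

    def token_for(self, record_id: str) -> str:
        """Return the stable token for a record, assigning a new one if needed."""
        if record_id not in self._tokens:
            self._tokens[record_id] = f"PATIENT-{next(self._counter):04d}"
        return self._tokens[record_id]

    def redact(self, text: str, record_id: str, identifiers: Iterable[str]) -> str:
        """Replace whole-word, case-insensitive matches of identifiers with the token."""
        values = sorted({v for v in identifiers if v}, key=len, reverse=True)
        if not values:
            return text
        # One alternation, longest first, so "Jane Doe" wins over "Doe" and
        # already-inserted tokens are never rescanned.
        alternatives = "|".join(re.escape(v) for v in values)
        pattern = re.compile(rf"(?<!\w)(?:{alternatives})(?!\w)", re.IGNORECASE)
        return pattern.sub(self.token_for(record_id), text)


pseudo = Pseudonymizer()
note = "Jane Doe (MRN 123456) reports abdominal pain; Ms. Doe denies fever."
safe = pseudo.redact(note, record_id="123456", identifiers=["Jane Doe", "Doe", "123456"])
print(safe)
# → PATIENT-0001 (MRN PATIENT-0001) reports abdominal pain; Ms. PATIENT-0001 denies fever.
```

Someone reviewing chat logs would see only `PATIENT-0001`; linking it back to a person requires access to the mapping, which is the kind of separation Murdoch suggests. A misspelling such as “Jane Do” would not be caught, which is one reason automated de-identification is usually paired with other safeguards.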

As Kaul noted in the AI Hub demo, corporations are in fact banning use of “the ChatGPTs of the world.” Last year, a ChatGPT user said his account leaked other people’s passwords and chat histories. Multiple federal agencies have blocked the use of generative AI services on their networks, including the Department of Veterans Affairs, the Department of Energy, the Social Security Administration, and the Agriculture Department, while the Agency for International Development warned employees not to input private data into public AI systems.

Casey Fiesler, Associate Professor of Information Science at University of Colorado Boulder, told me in a call that while it’s good for physicians to be discouraged from putting patient data into the open-web version of ChatGPT, how the Northwell network implements privacy safeguards is important—as is education for users. “I would hope that if hospital staff is being encouraged to use these tools, that there is some *significant* education about how they work and how it’s appropriate and not appropriate,” she said. “I would be uncomfortable with medical providers using this technology without understanding the limitations and risks.”

There have been several ransomware attacks on hospitals recently, including the Change Healthcare data breach earlier this year that exposed the protected health information of at least 100 million individuals, and a May 8 ransomware attack against Ascension, a Catholic health system made up of 140 hospitals across more than a dozen states, from which hospital staff were still recovering weeks later.

Sarah Myers West, co-executive director of the AI Now Institute, told 404 Media that healthcare workers, including the nurses’ union National Nurses United, have been raising the alarm about AI in healthcare settings. “A set of concerns they’ve raised is that frequently, the deployment of these systems is a pretext for reducing patient facing staffing, and that leads to real harms,” she said, pointing to a June Bloomberg report that said a Google AI tool meant to analyze patient medical records missed noting a patient’s drug allergies. A nurse caught the omission. West said that along with privacy and security concerns, these kinds of flaws in AI systems have “life or death” consequences for patients.

Earlier this year, a group of researchers found that OpenAI’s Whisper transcription tool makes up sentences, the Associated Press reported. The researchers—who presented their work as a conference paper at the 2024 ACM Conference on Fairness, Accountability, and Transparency in June—wrote that many of Whisper’s transcriptions were highly accurate, but roughly one percent of audio transcriptions “contained entire hallucinated phrases or sentences which did not exist in any form in the underlying audio.” The researchers analyzed the hallucinated content and found that 38 percent of those hallucinations “include explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority,” they wrote. Nabla, an AI copilot tool that recently raised $24 million in a Series B funding round, uses a combination of Microsoft’s off-the-shelf speech-to-text API and a fine-tuned Whisper model. Nabla is already being used in major hospital systems, including the University of Iowa.

“There are so many examples of these kinds of mistakes or flaws that are compounded by the use of AI systems to reduce staffing, where this hospital system could otherwise just adequately staff their patient beds and lead to better clinical outcomes,” West said.